If you have explored service mesh, key-value store, or service discovery
solutions in the cloud-native space, you have almost certainly come across
Consul. Consul, developed by HashiCorp, is a multi-purpose solution that
primarily provides these features: service discovery, a Key-Value store,
and a service mesh.

This blog post briefly explains the deployment patterns for Consul that let
applications watch configuration changes stored in the Key-Value store. It
also explains how to discover and synchronize with services running outside
the Kubernetes cluster, and how to enable the Service Mesh feature with
Consul. We broadly categorize Consul deployment patterns as in-cluster
patterns (Consul deployed in the Kubernetes cluster) and hybrid patterns
(Consul deployed outside the Kubernetes cluster).

In-cluster deployment patterns

In these deployment patterns, both the Consul server and the Consul agent
reside in the Kubernetes cluster. Although Consul provides many features, we
will focus on which approach works better when dealing with the Consul
Key-Value store.

Briefly, the Consul Key-Value (KV) store provides a simple way to store
namespaced or hierarchical configurations and properties in any form,
including string, JSON, YAML, and HCL.

A complementary feature for KV is watches. As the name suggests, a watch
monitors the values of keys stored in KV and notifies you when one or more
of them change. In addition to keys, we can also watch services, nodes,
events, etc. Whenever a notification is received for a configuration change,
a ‘handler’ is called to act on it. A handler can be an executable (used to
invoke the desired action when a notification arrives) or an HTTP endpoint
(which receives the notification as a request and can be used, for example,
for health checks).
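To make the notification concrete, here is a minimal Python sketch of what a handler might do with the payload of a `key` watch. It assumes the payload is a JSON object with `Key`, `ModifyIndex`, and a base64-encoded `Value`, and that a missing key arrives as `null`. The function name `decode_key_watch` and the sample data are illustrative only.

```python
import base64
import json


def decode_key_watch(payload: str) -> dict:
    """Parse the JSON passed to a `key` watch handler and decode the value."""
    entry = json.loads(payload)
    if entry is None:
        # Assumption: a watch on a key that does not exist delivers `null`.
        return {"key": None, "value": None, "modify_index": None}
    raw = entry.get("Value")
    # KV values travel base64-encoded inside the JSON payload.
    value = base64.b64decode(raw).decode("utf-8") if raw is not None else None
    return {"key": entry["Key"], "value": value, "modify_index": entry["ModifyIndex"]}


# Sample payload shaped like the output of a `key` watch (values are made up).
sample = json.dumps({
    "Key": "testKey1", "CreateIndex": 10, "ModifyIndex": 12,
    "LockIndex": 0, "Flags": 0,
    "Value": base64.b64encode(b"log_level=debug").decode("ascii"),
    "Session": "",
})
print(decode_key_watch(sample))
```

Comparing `modify_index` between notifications is one simple way for a handler to ignore repeated deliveries of the same value.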

Depending on how the handler is invoked, we can differentiate Consul
deployment patterns within a Kubernetes cluster as follows:

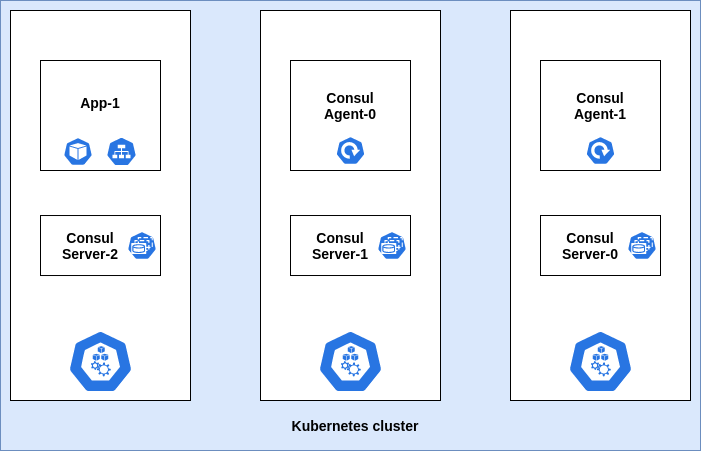

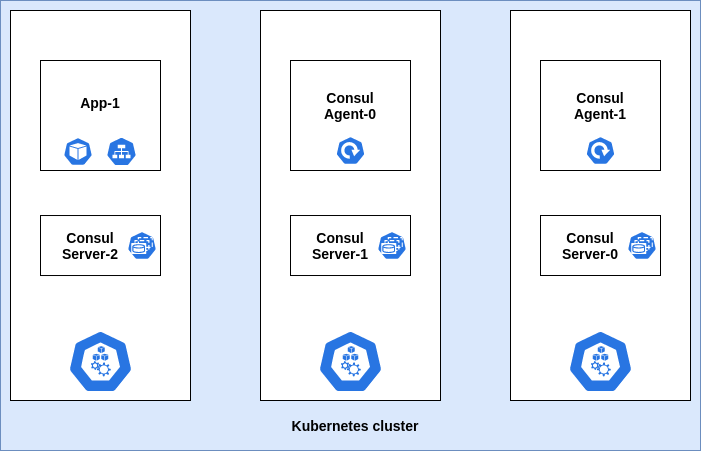

Consul agent as Deployment: Monitor KV

Let us consider an example in which we deploy the Consul server using the
standard Helm chart. The Consul agent is deployed as a separate Deployment
with one or more replicas, and an application needs to watch a specific
configuration in the KV.

Consul as a Deployment

With a properly configured Consul watch and handler, the application is
notified of KV changes. Watches are generally configured either with the CLI
command consul watch or with a JSON file placed in the config directory of
the Consul agent. A typical watch configuration is shown below:

    {
      "server": false,
      "datacenter": "dc",
      "data_dir": "/consul/data",
      "log_level": "INFO",
      "leave_on_terminate": true,
      "watches": [
        {
          "type": "key",
          "key": "testKey1",
          "handler_type": "http",
          "http_handler_config": {
            "path": "<Application Endpoint>",
            "method": "POST",
            "tls_skip_verify": true
          }
        },
        {
          "type": "key",
          "key": "testKey2",
          "handler_type": "script",
          "args": ["/scripts/handler.sh"]
        },
        {
          "type": "key",
          "key": "testKey2",
          "handler_type": "script",
          "args": ["/scripts/handler2"]
        }
      ]
    }

As the snippet above shows, multiple watches can be configured at a time.
Moreover, you can monitor the same key and trigger separate actions by
providing different scripts. A script handler can also execute any binary.
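Since a script handler can be any executable, it could just as well be a small Python program. The sketch below is one possible handler, not the one used in this post. It assumes Consul passes the watch payload as JSON on stdin and sets the `CONSUL_INDEX` environment variable. The function name `handle_watch` and the target path in the closing comment are hypothetical.

```python
import base64
import io
import json
import os
import tempfile


def handle_watch(stdin, target_path: str, env=os.environ) -> str:
    """Read a `key` watch payload from stdin and write its value to target_path."""
    entry = json.load(stdin)
    if not entry or entry.get("Value") is None:
        return "skipped: key missing or empty"
    value = base64.b64decode(entry["Value"])
    # Write to a temporary file in the same directory and rename it, so that
    # readers never observe a half-written configuration file.
    directory = os.path.dirname(os.path.abspath(target_path))
    fd, tmp_path = tempfile.mkstemp(dir=directory)
    with os.fdopen(fd, "wb") as tmp:
        tmp.write(value)
    os.replace(tmp_path, target_path)
    return f"wrote {entry['Key']} at index {env.get('CONSUL_INDEX', 'unknown')}"


# As a script handler, the entry point would look roughly like this
# (hypothetical path; requires `import sys`):
#     print(handle_watch(sys.stdin, "/config/testKey2.conf"))
```

Two watches on the same key could point to two such scripts with different target paths or actions, which is the arrangement the configuration above uses for testKey2.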

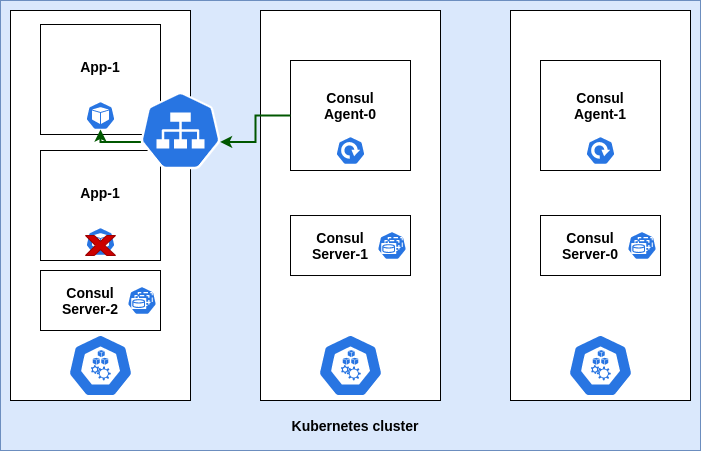

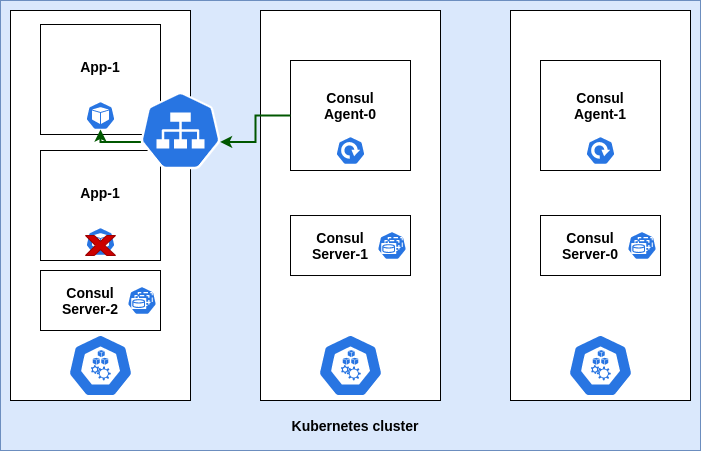

Scaling Application Pods

Now suppose there is a rise in traffic and the application needs to scale
accordingly. In this scenario, the newly scaled Pods may not get the KV
change notification. This is because the application is exposed to the
Consul agent via a Kubernetes Service. By default, a Kubernetes Service load
balances between the Pods on a round-robin (in userspace proxy mode) or
random (in iptables proxy mode) basis, relaying each incoming request to
only one of the running Pods of the application. Thus, a single notification
does not reach all the running Pods.

Moreover, if you want to take a specific action on receiving a Consul
notification, you need to configure a handler script that resides in the
application Pod. This script won’t be accessible to the Consul agent, as the
agent doesn’t have access to the file system inside the Pod.
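The load-balancing problem described above can be illustrated with a small simulation. This is only a sketch of the reasoning, not of kube-proxy itself: it assumes each notification is one HTTP request and that the Service hands each request to exactly one randomly chosen Pod. The Pod names are made up.

```python
import random


def deliver_via_service(pods, notifications, rng):
    """Count how many watch notifications each Pod receives behind one Service."""
    received = {pod: 0 for pod in pods}
    for _ in range(notifications):
        # A Service forwards each request to exactly one backend Pod.
        received[rng.choice(pods)] += 1
    return received


pods = ["app-0", "app-1", "app-2"]
counts = deliver_via_service(pods, notifications=1, rng=random.Random(0))
missed = [pod for pod, n in counts.items() if n == 0]
print(f"{len(missed)} of {len(pods)} Pods missed the KV change: {missed}")
```

With three replicas and one notification, two Pods always miss the change, and adding replicas only widens the gap.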

Scaling Application ReplicaSet

To summarize, this Consul deployment pattern is useful for low-volume use
cases where we just need to monitor KV changes and don’t need to take any
action when they are detected. The Deployment of Consul agents is easy to
scale whenever required.

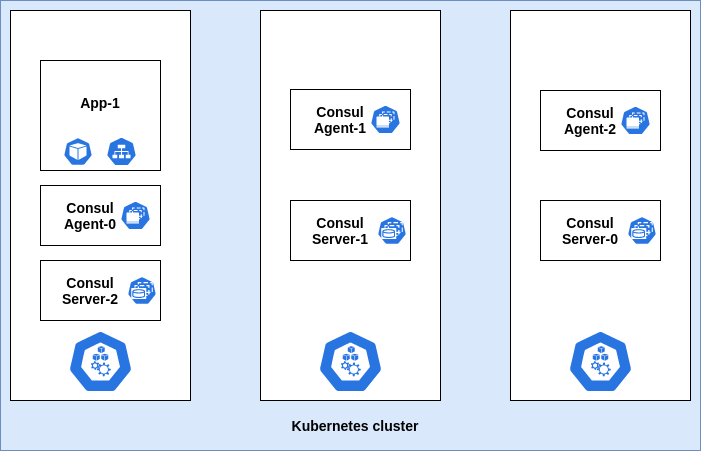

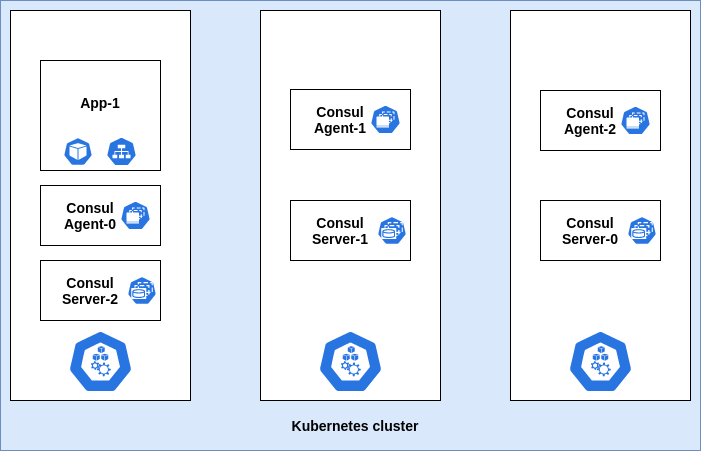

Consul agent as a DaemonSet: Monitor KV

In this scenario, we use the Consul Helm chart to deploy the Consul agent as
a DaemonSet. This is done by setting client.enabled: true and adding the
Consul watch configuration to client.extraConfig in the values.yaml of the
Helm chart. Although this approach is similar to the previous deployment
pattern, it removes the effort of managing the agent Deployment yourself.
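As a rough illustration of those two settings, the Python sketch below assembles the relevant part of the Helm values. It assumes client.extraConfig takes the extra agent configuration as a JSON string. The helper name `client_values` is hypothetical, and the watch entry simply reuses the placeholder endpoint from the earlier configuration.

```python
import json


def client_values(watches):
    """Build Helm values that enable the client DaemonSet and pass watches to it."""
    return {
        "client": {
            "enabled": True,
            # Assumption: extraConfig is handed to the agent as a JSON string.
            "extraConfig": json.dumps({"watches": watches}),
        }
    }


watches = [{
    "type": "key",
    "key": "testKey1",
    "handler_type": "http",
    "http_handler_config": {
        "path": "<Application Endpoint>",
        "method": "POST",
    },
}]
# JSON is also valid YAML, so the printed document has the shape of a values file.
print(json.dumps(client_values(watches), indent=2))
```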

Consul DaemonSet pattern

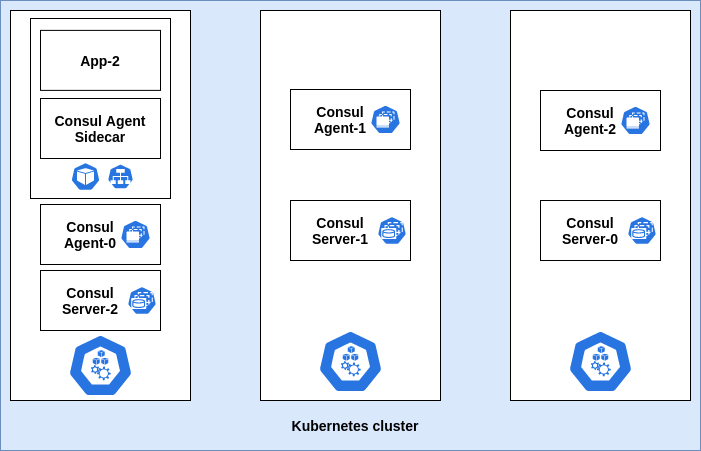

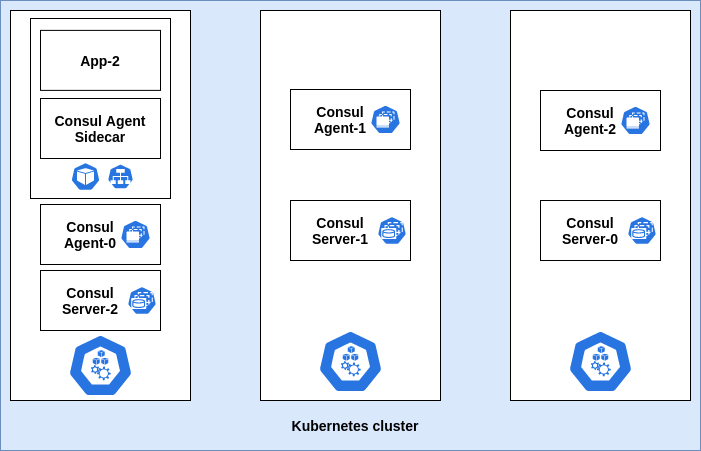

Consul agent as a sidecar: Executable handler script

In this pattern, the Consul agent runs as a sidecar in each Pod. A watch is
configured in this Pod to track changes to the KV and notify the application
through the executable handler. In this case, the handler points to
localhost, in contrast to the DaemonSet approach, where the handler points
to a Service endpoint or an IP address. Whenever the Pod terminates or goes
down, a new Pod is created with the same configuration and joins the Consul
cluster to track the same KV changes again.
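Because the agent and the application share the Pod's network namespace, the application only needs to listen on localhost. The sketch below shows one way an application could receive notifications from a sidecar agent over HTTP. It assumes the agent sends the watch payload as the JSON body of a POST request. The `/watch` path and the in-memory `received` list are illustrative, and the request at the end merely simulates the agent.

```python
import json
import threading
import urllib.request
from http.server import BaseHTTPRequestHandler, HTTPServer

received = []  # payloads seen so far; a real application would act on them


class WatchHandler(BaseHTTPRequestHandler):
    def do_POST(self):
        length = int(self.headers.get("Content-Length", 0))
        body = self.rfile.read(length)
        received.append(json.loads(body) if body else None)
        self.send_response(200)
        self.end_headers()

    def log_message(self, *args):
        pass  # keep the example quiet


def start_server(port=0):
    """Serve on localhost only; port 0 lets the OS pick a free port."""
    server = HTTPServer(("127.0.0.1", port), WatchHandler)
    threading.Thread(target=server.serve_forever, daemon=True).start()
    return server


server = start_server()
url = f"http://127.0.0.1:{server.server_port}/watch"
# Simulate the sidecar agent posting a notification to the application.
request = urllib.request.Request(url, data=b'{"Key": "testKey1"}', method="POST")
with urllib.request.urlopen(request) as response:
    status = response.status
server.shutdown()
server.server_close()
print(status, received)
```

Binding to 127.0.0.1 keeps the endpoint reachable only from containers in the same Pod, which is the property that makes every replica receive its own notification.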

Consul Sidecar pattern

Service Sync

Service sync is a feature provided by Consul that syncs services running in
the Kubernetes cluster with services running in the Consul cluster. The sync
can be one-way between Consul and Kubernetes, or two-way. Thus, any node
that is part of the Consul cluster can access Kubernetes services using
Consul DNS. Conversely, Consul services synced to Kubernetes can be accessed
using kube-dns, environment variables, etc., which is the native Kubernetes
way. This feature is most useful in hybrid patterns.
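The two lookup styles mentioned above differ mainly in the DNS name a client uses. The following sketch builds both names, assuming the default `consul` DNS domain and the default `cluster.local` Kubernetes cluster domain. The helper names and the service names in the usage lines are hypothetical.

```python
def consul_dns_name(service, datacenter=None, domain="consul"):
    """Name resolvable through Consul DNS: <service>.service[.<datacenter>].<domain>."""
    parts = [service, "service"]
    if datacenter:
        parts.append(datacenter)
    parts.append(domain)
    return ".".join(parts)


def kube_dns_name(service, namespace="default", cluster_domain="cluster.local"):
    """Name resolvable through kube-dns: <service>.<namespace>.svc.<cluster-domain>."""
    return f"{service}.{namespace}.svc.{cluster_domain}"


# A VM in the Consul cluster would look up a synced Kubernetes service like this:
print(consul_dns_name("web"))
# A Pod would look up a synced Consul service the Kubernetes way:
print(kube_dns_name("db"))
```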

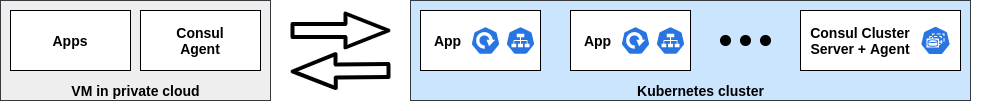

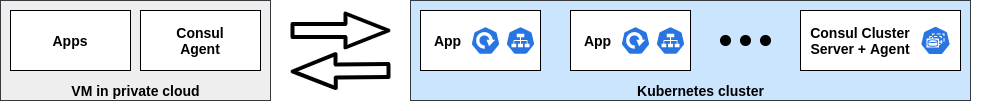

Hybrid deployment patterns

This pattern suits scenarios where some services of an application run on
virtual machines (VMs) and the remaining services are containerized and
managed by Kubernetes. This scenario is common while migrating from legacy
to cloud-native workloads. Here, services running on VMs are discovered and
accessed by services running in Kubernetes and vice versa. Service discovery
in a hybrid pattern typically relies on a feature called Cloud Auto-join,
which lets Consul agents find and join the cluster using cloud provider
metadata. This requires appropriate cloud configuration; the rest is taken
care of by Consul. The feature supports all major cloud providers, including
AWS, GCP, and Azure. To enable the sync between Kubernetes services and
services running on VMs, we need to set syncCatalog.enabled: true in the
Helm chart values, whereas the direction of the sync is controlled by
syncCatalog.toConsul: true and syncCatalog.toK8s: true. In addition, the set
of synced services can be narrowed by namespace, prefix, and tag.

Consul Hybrid Pattern

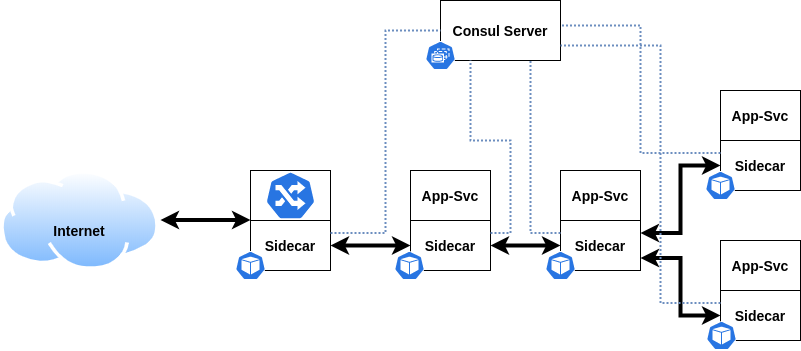

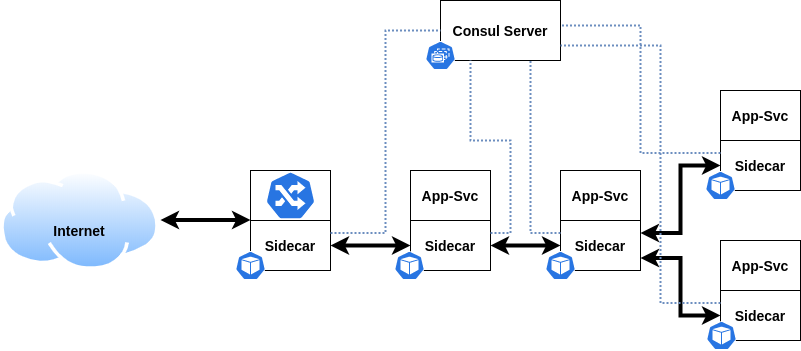

Consul Connect

Connect is the service mesh feature provided by Consul. In this pattern,
Consul adds a sidecar to each application Pod. The sidecar acts as a proxy
for all inbound and outbound communication of the application containers
inside the Pod. The sidecar proxies establish mutual TLS between them, so
all communication within the service mesh is encrypted and authenticated.
Moreover, the Connect sidecars act as the data plane while the Consul
cluster acts as the control plane. This provides fine-grained control to
allow or deny traffic between the registered services.

The following annotations on a Deployment’s Pod template enable the Consul service mesh:

"consul.hashicorp.com/connect-inject": "true"

"consul.hashicorp.com/connect-service-port": "8080"

"consul.hashicorp.com/connect-service-upstreams": "upstream-svc1:port1, upstream-svc2:port2, ..."

connect-inject adds the Consul Connect sidecar to the application Pod.
connect-service-port specifies the port for inbound communication.
connect-service-upstreams specifies the upstream services that the current
application service connects to. The port given for each upstream is the
static local port on which the sidecar listens for that upstream’s traffic.
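To show how an application might consume the upstreams annotation, the sketch below parses its value into service and port pairs and derives the local address for each. It assumes the `name:port` form shown above and ignores any further segments. The helper names and port numbers are illustrative.

```python
def parse_upstreams(annotation):
    """Split "name:port, name:port" into (service, local_port) pairs."""
    upstreams = []
    for item in annotation.split(","):
        item = item.strip()
        if not item:
            continue
        # Only the first two segments are used; anything after them is ignored.
        name, port = item.split(":")[:2]
        upstreams.append((name, int(port)))
    return upstreams


def local_address(upstream):
    """Address the application dials; the sidecar forwards it to the upstream."""
    _name, port = upstream
    return f"localhost:{port}"


for upstream in parse_upstreams("upstream-svc1:1234, upstream-svc2:5678"):
    print(upstream[0], "->", local_address(upstream))
```

The application never addresses the upstream service directly: it talks to the local port, and the sidecar carries the traffic over mutual TLS.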

Consul Service Mesh scenario

