How to use Argo Rollouts for A/B Testing in Progressive Delivery

Traditionally, software and application updates were pushed out as one single chunk to the end user. In case anything went wrong with the upgrade, there wasn’t much the engineering team could do since they had little control over the application once it was released. However, with the advent of progressive delivery strategies, teams got finer control over their releases. This made it easier for them to roll back to a previous version quickly in case something went wrong.

Most deployment strategies, such as Canary and Blue-Green, let us roll back quickly while running multiple versions of our application at once. Suppose one of those versions has a feature turned on and the other has it turned off. How do you know which one is better before releasing it to a larger group?

In this blog post, we’ll talk about A/B testing that will allow you to leverage data to help you decide which version is better. We’ll look at what A/B testing is, understand its role in progressive delivery, and also show how A/B testing works with Argo Rollouts using a simple example.

A/B Testing 101

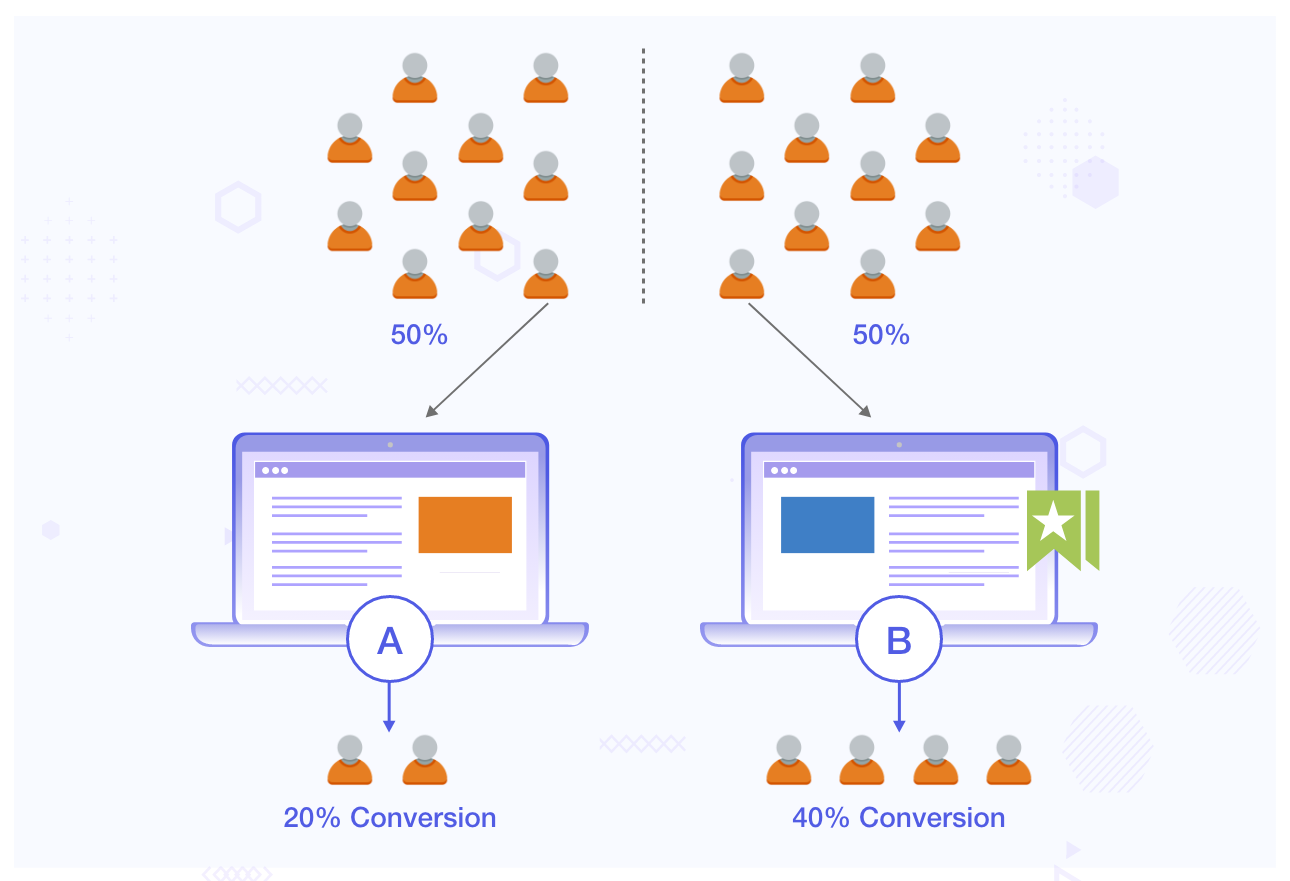

A/B testing is a process to compare and evaluate two different versions of an application against each other to decide which one performs better. This is also known as split testing. Different versions are shown to different sets of users and statistical analysis is performed by collecting different metrics that help decide which version performs better.

One of the main reasons A/B testing is popular is that it allows teams to make changes to their product based on data rather than opinion. A team can have a vision for a product, but it is user feedback that helps shape its future. Based on such feedback, the product can be improved over time.

This methodology is quite popular amongst marketers to gauge the performance of a landing page and applications. Using A/B testing, they are not only able to make design decisions but also understand the user experience that affects the performance of their applications. It might be hard for many of us to believe that a simple change in the color of a button can lead to a 21% increase in conversions.
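
To make the statistical analysis step concrete, here is a minimal Python sketch of a two-proportion z-test, one common way to compare the conversion rates of two versions. The function name and the visitor counts are illustrative assumptions, not data from a real experiment.

```python
import math


def two_proportion_z_test(conv_a, n_a, conv_b, n_b):
    """Return (z, two-sided p-value) for the difference in conversion rates.

    conv_a / n_a: conversions and visitors for version A (hypothetical data)
    conv_b / n_b: conversions and visitors for version B (hypothetical data)
    """
    rate_a = conv_a / n_a
    rate_b = conv_b / n_b
    # Pooled rate under the null hypothesis that both versions convert equally.
    pooled = (conv_a + conv_b) / (n_a + n_b)
    std_err = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (rate_b - rate_a) / std_err
    # Standard normal CDF expressed with the error function.
    cdf = 0.5 * (1 + math.erf(abs(z) / math.sqrt(2)))
    return z, 2 * (1 - cdf)


if __name__ == "__main__":
    # Made-up numbers: B converts 21% better than A in relative terms.
    z, p = two_proportion_z_test(conv_a=100, n_a=1000, conv_b=121, n_b=1000)
    print(f"z = {z:.2f}, p-value = {p:.3f}")
```

With these made-up counts the p-value comes out at roughly 0.13, so a 21% relative lift measured on 1,000 visitors per version would not clear the usual 0.05 threshold. The same lift on more traffic could.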

Data-Driven Progressive Delivery using A/B Testing

In the case of progressive delivery, you have two different deployments on which you run tests that help you decide which version is better among the two. We can launch multiple long-running experiments on various versions of our applications before deciding which one to proceed with.

The tests that you run and the metrics that you collect are entirely dependent on your use case. You can run tests on the application versions themselves or use external metrics to decide on the final version. It’s common for teams to use metrics like latency, click-through rate, and bounce rate to choose the version that gets promoted to production.

Using A/B testing in progressive delivery not only makes your process more resilient and swift but also helps you decide the best experience for your users based on data.

Let us see how we can use Argo Rollouts for A/B Testing in Progressive Delivery.

A/B testing with Argo Rollouts Experiments

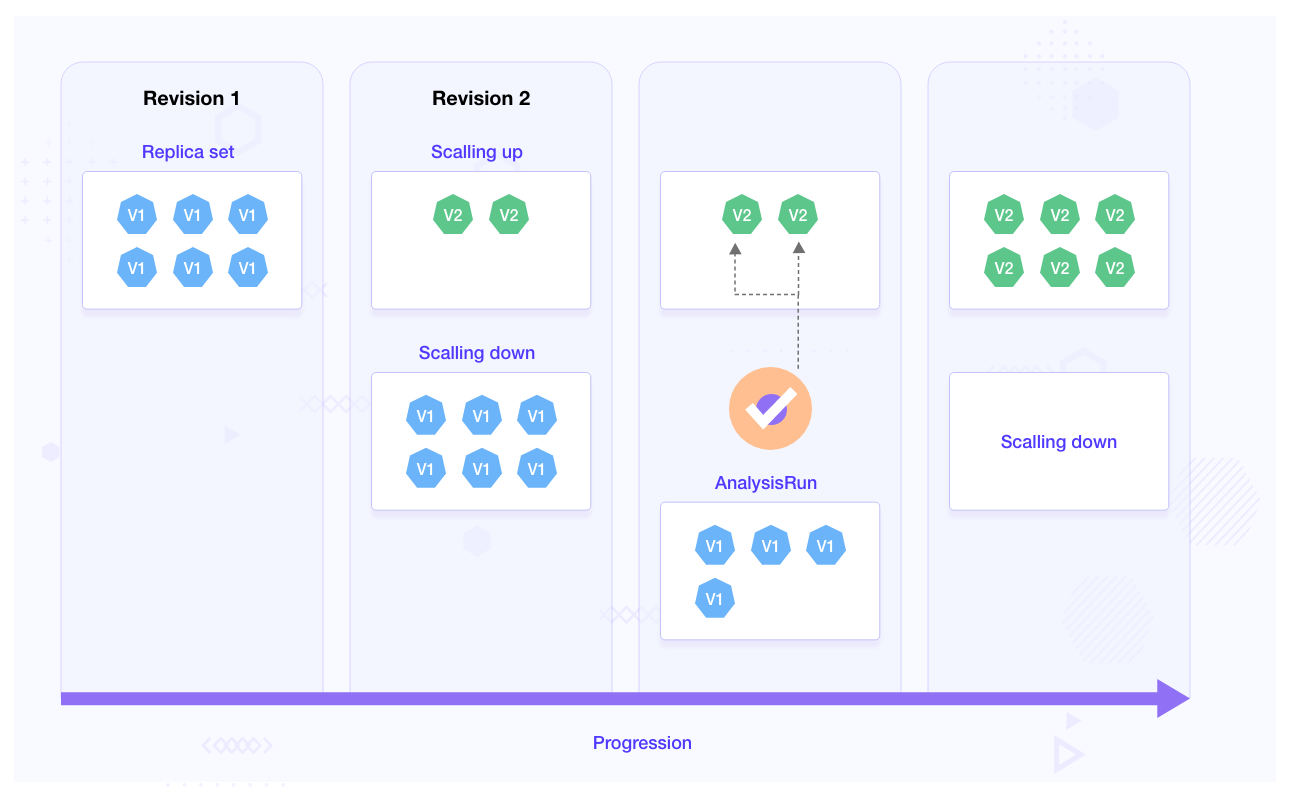

Argo Rollouts is a Kubernetes controller with a set of CRDs that provide advanced deployment strategies such as Canary, Blue-Green, and Experiments. Like a Deployment, a Rollout manages ReplicaSets: the controller handles the creation and deletion of these ReplicaSets based on the spec.template defined in the Rollout object.

Argo Rollouts Experiment is a Kubernetes CRD that allows us to perform A/B testing & Kayenta-style analysis of our application. There are three components that form an Experiment. Let’s understand what these are:

- Experiment: An experiment is intended to run on a ReplicaSet for analysis. Experiments can run for a predefined duration or indefinitely until stopped. If the duration is not specified, the experiment runs until it is stopped or until all analyses marked `requiredForCompletion` have completed. Internally, it also references an AnalysisTemplate. This is used to create a baseline and a canary deployment in parallel and compare the metrics generated by both to decide on the next steps.

- AnalysisTemplate: This template defines how to perform an analysis. It includes details like the frequency, duration, and success/failure values that will be used to determine whether an experiment is successful or failed.

- AnalysisRun: These are jobs that run based on the details provided in the AnalysisTemplate. Each run ends as successful, failed, or inconclusive, and that result decides whether the rollout’s update continues. The rollout proceeds only if the AnalysisRun is successful.

Experiments can be run standalone, as part of rollouts, or along with traffic routing. In this post, we’ll discuss how to use Argo Rollouts experiment with a canary rollout.

Below is how an Argo Rollouts Experiment works with canary rollout:

- ReplicaSets are created and, once they reach full availability, the experiment starts and moves to the Running state.

- While the experiment is running, it starts an AnalysisRun based on the details provided in the AnalysisTemplate.

- If a duration is specified, the experiment runs for that duration before completing.

- If the AnalysisRun is successful, the experiment is successful and the rollout proceeds. If it fails, the rollout does not proceed.

- Once the AnalysisRun is complete and the experiment is over, the experiment’s ReplicaSets are scaled to zero.
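
The lifecycle above can be sketched as a small decision function. This is a simplified Python model for illustration only, not the controller's actual code: the function name, the phase strings, and the handling of edge cases (such as inconclusive runs or experiments without a duration) are assumptions.

```python
def experiment_phase(replicasets_available, elapsed_seconds, duration_seconds, analysis_phases):
    """Approximate the phase of an experiment that has a fixed duration.

    analysis_phases: phases of the experiment's AnalysisRuns so far,
    for example ["Running"], ["Successful"], or ["Failed"].
    """
    if not replicasets_available:
        # The experiment waits until its ReplicaSets are fully available.
        return "Pending"
    if any(phase in ("Failed", "Error") for phase in analysis_phases):
        # A failed analysis fails the experiment, so the rollout does not proceed.
        return "Failed"
    if elapsed_seconds < duration_seconds:
        # Even after a successful analysis, the experiment keeps running
        # until the configured duration has elapsed.
        return "Running"
    # Duration elapsed without failures: the experiment passes and its
    # ReplicaSets are scaled down to zero.
    return "Successful"


if __name__ == "__main__":
    print(experiment_phase(True, 30, 300, ["Successful"]))
    print(experiment_phase(True, 300, 300, ["Successful"]))
    print(experiment_phase(True, 30, 300, ["Failed"]))
```

Note how a successful AnalysisRun alone does not end the experiment in this model: the first call still reports Running because only 30 of the 300 seconds have passed.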

Argo Rollouts Experiment with canary deployment

Having gone through A/B Testing and Argo Rollouts experiment, it’s time to apply our learnings. We’ll use Argo Rollouts experiment with a canary deployment strategy and understand how experiments help us perform A/B testing and automatically decide the rollout progress status.

Use case

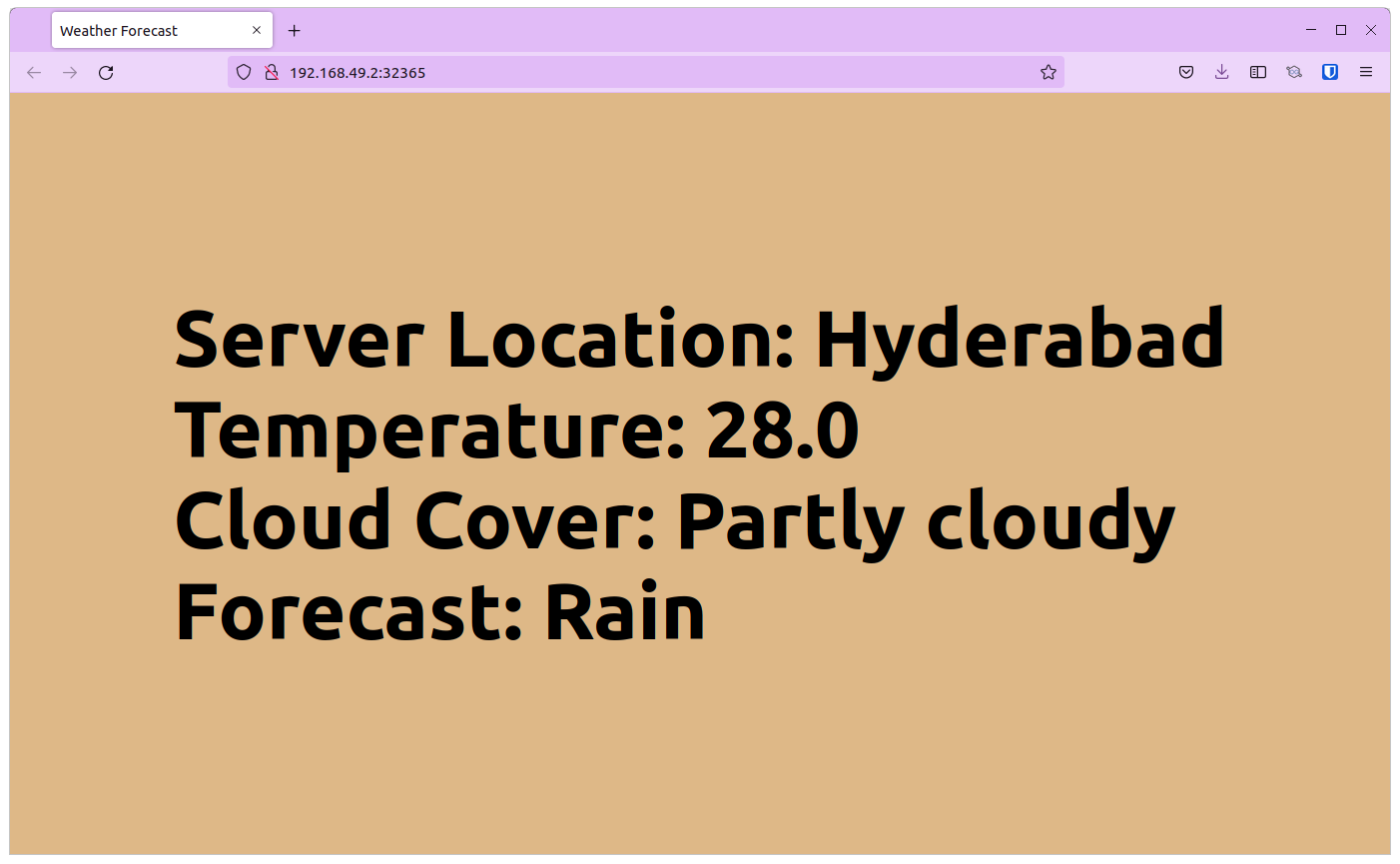

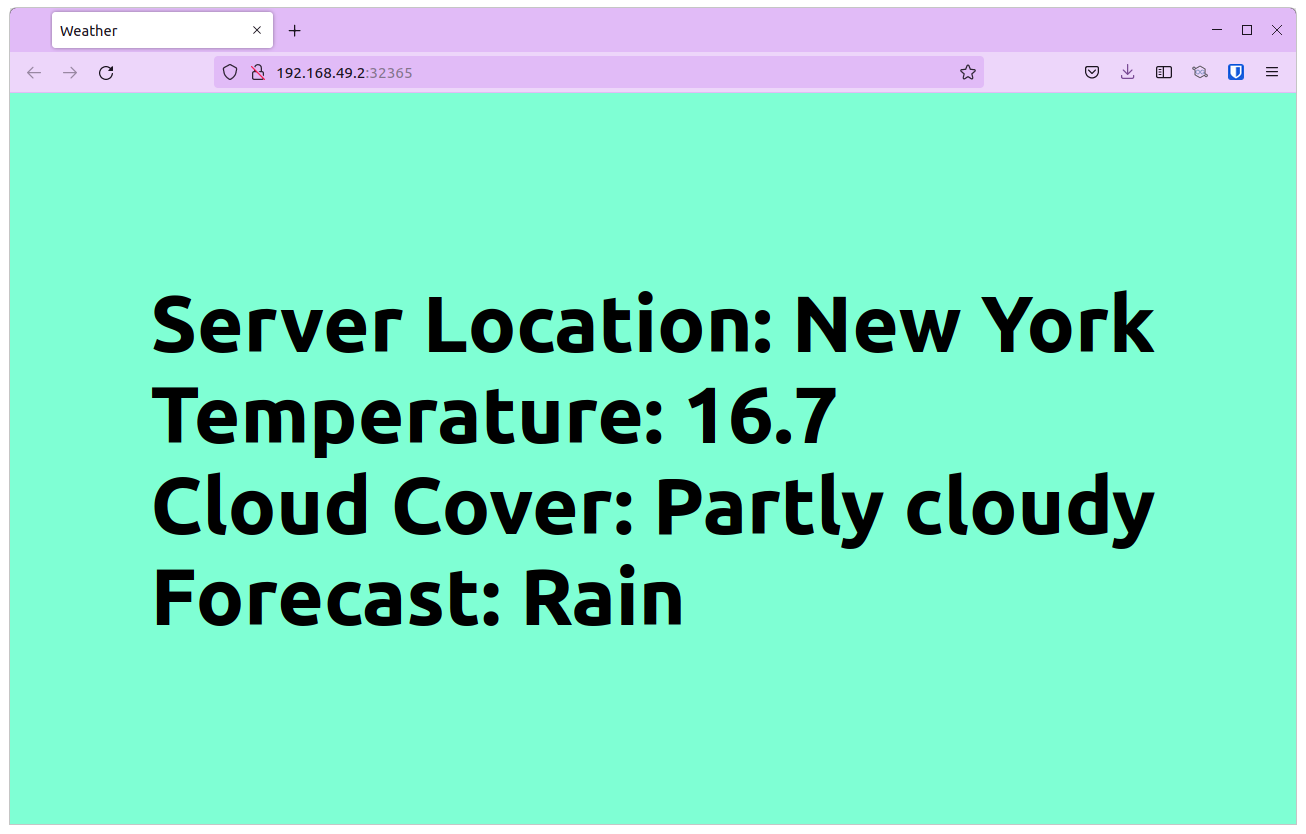

We’ll be using the familiar weather application that you might have seen in our Blue-Green Deployment using Argo Rollouts as well as Progressive Delivery using service mesh blog posts. There are two versions of the weather app that will be rolled out using a canary deployment strategy.

We will run an experiment within the canary strategy. The experiment references an AnalysisTemplate that fetches a response from an API. Based on the response, the experiment is marked as passed or failed. If the experiment succeeds, the newer version of the application is rolled out; otherwise, it is not.

Having understood the use case, let’s get into the code:

Implementation of Argo Rollouts Experiment

To begin with, you need a Kubernetes cluster. You can either have it running on your local system or on a managed cloud provider. You then connect to this cluster by configuring your terminal to access this cluster. For this example, we are using Minikube. If you are also using Minikube, make sure to enable the ingress addon.

The next step is to clone the repository that has this example:

git clone https://github.com/infracloudio/ArgoRollouts-ABTesting-WeatherExample

Setup Argo Rollouts Controller

Create a namespace to install the Argo Rollouts controller into:

kubectl create namespace argo-rollouts

Install the latest version of Argo Rollouts controller:

kubectl apply -n argo-rollouts -f https://github.com/argoproj/argo-rollouts/releases/latest/download/install.yaml

Ensure that all the components and pods for argo rollouts are in the running state. You can check it by running the following command:

kubectl get all -n argo-rollouts

One of the easiest and recommended ways to interact with the Argo Rollouts controller is the kubectl argo rollouts plugin. You can install it by executing the following commands, which download the Linux amd64 binary:

curl -LO https://github.com/argoproj/argo-rollouts/releases/latest/download/kubectl-argo-rollouts-linux-amd64

chmod +x ./kubectl-argo-rollouts-linux-amd64

sudo mv ./kubectl-argo-rollouts-linux-amd64 /usr/local/bin/kubectl-argo-rollouts

kubectl argo rollouts version

At this point, we have successfully configured the Argo Rollouts controller on our Kubernetes cluster.

Argo Rollouts Experiment with Canary Deployment

As mentioned earlier, we’re going to use Argo Rollouts experiment along with Canary deployment. For that, we will create a canary rollout.yaml along with an AnalysisTemplate.

Rollout spec:

apiVersion: argoproj.io/v1alpha1
kind: Rollout
metadata:
  name: rollout-experiment
spec:
  replicas: 2
  strategy:
    canary:
      steps:
      - setWeight: 20
      - pause: {duration: 10}
      # The third step is the experiment, which starts a single canary pod
      - experiment:
          duration: 5m
          templates:
          - name: canary
            specRef: canary
          # This experiment performs its own analysis by referencing an AnalysisTemplate
          # The success or failure of these runs will progress or abort the rollout respectively.
          analyses:
          - name: canary-experiment
            templateName: webmetric
      - setWeight: 40
      - pause: {duration: 10}
      - setWeight: 60
      - pause: {duration: 10}
      - setWeight: 80
      - pause: {duration: 10}
  revisionHistoryLimit: 2
  selector:
    matchLabels:
      app: rollout-experiment
  template:
    metadata:
      labels:
        app: rollout-experiment
    spec:
      containers:
      - name: rollouts-demo
        image: docker.io/atulinfracloud/weathersample:v1
        imagePullPolicy: Always
        ports:
        - containerPort: 5000
---
apiVersion: v1
kind: Service
metadata:
  name: rollout-weather-svc
spec:
  selector:
    app: rollout-experiment
  ports:
  - protocol: "TCP"
    port: 80
    targetPort: 5000
  type: NodePort
---
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: rollout-ingress
  annotations:
    kubernetes.io/ingress.class: nginx
spec:
  rules:
  - http:
      paths:
      - path: /
        pathType: Prefix
        backend:
          service:
            name: rollout-weather-svc
            port:
              number: 80

In the above rollout.yaml, we create a canary strategy and provide an experiment as a step. The experiment is scheduled to run for 5 minutes, even if its analysis finishes earlier. It creates a canary ReplicaSet and references an AnalysisTemplate object.
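
As a quick sanity check on the steps in this manifest, the following Python sketch counts them and adds up the minimum time the rollout spends waiting. It treats a bare number in a pause duration as seconds and assumes the experiment runs for its full duration. The STEPS list is a hand-written copy of the manifest, and the helper names are hypothetical.

```python
import re

# Hand-copied from the canary steps in rollout.yaml.
STEPS = [
    {"setWeight": 20},
    {"pause": "10"},
    {"experiment": "5m"},
    {"setWeight": 40},
    {"pause": "10"},
    {"setWeight": 60},
    {"pause": "10"},
    {"setWeight": 80},
    {"pause": "10"},
]

UNIT_SECONDS = {"": 1, "s": 1, "m": 60, "h": 3600}


def to_seconds(duration):
    """Convert values such as "10", "30s", "5m", or "1h" to seconds."""
    match = re.fullmatch(r"(\d+)([smh]?)", duration)
    if match is None:
        raise ValueError(f"unsupported duration: {duration!r}")
    amount, unit = match.groups()
    return int(amount) * UNIT_SECONDS[unit]


def minimum_wait_seconds(steps):
    """Sum the waiting time of the pause and experiment steps."""
    total = 0
    for step in steps:
        for kind, value in step.items():
            if kind in ("pause", "experiment"):
                total += to_seconds(value)
    return total


if __name__ == "__main__":
    print(len(STEPS), "steps,", minimum_wait_seconds(STEPS), "seconds of waiting")
```

The nine steps correspond to the Step: 9/9 that kubectl argo rollouts get rollout reports for a healthy rollout, and the waiting time adds up to 340 seconds, most of it the 5-minute experiment.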

AnalysisTemplate:

apiVersion: argoproj.io/v1alpha1
kind: AnalysisTemplate
metadata:
  name: webmetric
spec:
  metrics:
  - name: webmetric
    successCondition: result.completed == true # Change this value to `result.completed == false` to fail the test
    provider:
      web:
        url: "https://jsonplaceholder.typicode.com/todos/4" # URL returns a JSON object with completed being one of the values, it returns true by default
        timeoutSeconds: 10

The AnalysisTemplate uses a webmetric. It calls an API that returns a JSON object. The object has multiple variables and one of them is completed. Below is a sample JSON object:

{
  "userId": 1,
  "id": 4,
  "title": "et porro tempora",
  "completed": true
}

The template specifies the successCondition as result.completed == true. This means that the AnalysisRun will be successful only when the value of completed is true. In other words, the rollout will progress with the update only if this AnalysisRun is successful.
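
The check can be mirrored in a few lines of Python to see why the sample response passes. This is only an illustration of the comparison: Argo Rollouts evaluates successCondition with its own expression engine, and the function name here is hypothetical.

```python
import json

# The sample response returned by the API in this example.
SAMPLE_BODY = '{"userId": 1, "id": 4, "title": "et porro tempora", "completed": true}'


def measurement_phase(body, expected_completed=True):
    """Mimic `result.completed == <expected_completed>` on a JSON response."""
    result = json.loads(body)
    if result.get("completed") == expected_completed:
        return "Successful"
    return "Failed"


if __name__ == "__main__":
    # Condition: result.completed == true
    print(measurement_phase(SAMPLE_BODY))
    # Condition: result.completed == false
    print(measurement_phase(SAMPLE_BODY, expected_completed=False))
```

Flipping the expected value is the same trick the template's inline comment suggests for forcing a failure.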

Go ahead and deploy the file to create the rollout and AnalysisTemplate. For easy deployment, we’ve combined the services and rollout mentioned above in a single YAML file: rollout.yaml. We will first deploy the AnalysisTemplate followed by the rollout.

$ kubectl apply -f analysis.yaml

analysistemplate.argoproj.io/webmetric created

Check the status of the AnalysisTemplate created using the following command:

$ kubectl describe AnalysisTemplate
Name:         webmetric
Namespace:    default
Labels:       <none>
Annotations:  <none>
API Version:  argoproj.io/v1alpha1
Kind:         AnalysisTemplate
Metadata:
  Creation Timestamp:  2022-09-26T07:03:32Z
  Generation:          1
  Managed Fields:
    API Version:  argoproj.io/v1alpha1
    Fields Type:  FieldsV1
    fieldsV1:
      f:metadata:
        f:annotations:
          .:
          f:kubectl.kubernetes.io/last-applied-configuration:
      f:spec:
        .:
        f:metrics:
    Manager:         kubectl-client-side-apply
    Operation:       Update
    Time:            2022-09-26T07:03:32Z
  Resource Version:  8231
  UID:               43717fbe-8126-4564-87a1-6c45b1d45ba8
Spec:
  Metrics:
    Name:  webmetric
    Provider:
      Web:
        Timeout Seconds:  10
        URL:              https://jsonplaceholder.typicode.com/todos/4
    Success Condition:    result.completed == true
Events:                   <none>

Once the AnalysisTemplate is deployed, let’s deploy the rollout using the following command:

kubectl apply -f rollout.yaml

rollout.argoproj.io/rollout-experiment created

service/rollout-weather-svc created

ingress.networking.k8s.io/rollout-ingress created

You can verify the successful deployment by running the following command:

$ kubectl argo rollouts get rollout rollout-experiment
Name:            rollout-experiment
Namespace:       default
Status:          ✔ Healthy
Strategy:        Canary
  Step:          9/9
  SetWeight:     100
  ActualWeight:  100
Images:          docker.io/atulinfracloud/weathersample:v1 (stable)
Replicas:
  Desired:       2
  Current:       2
  Updated:       2
  Ready:         2
  Available:     2

NAME                                            KIND        STATUS     AGE   INFO
⟳ rollout-experiment                            Rollout     ✔ Healthy  116s
└──# revision:1
   └──⧉ rollout-experiment-9fb48bdbf            ReplicaSet  ✔ Healthy  116s  stable
      ├──□ rollout-experiment-9fb48bdbf-czvsh   Pod         ✔ Running  116s  ready:1/1
      └──□ rollout-experiment-9fb48bdbf-qnk2s   Pod         ✔ Running  116s  ready:1/1

At this point, our application is running with v1 of the weather app. In order to access the application, you need to do the following:

- In a new terminal, run `minikube tunnel`. This will create a tunnel so that we can access the ingress from our local host.

- In another terminal, expose the `rollout-weather-svc` service using `minikube service rollout-weather-svc --url`.

- Access the application using the URL you get from the above step.

Let us now update the image with a newer one using the canary strategy.

kubectl argo rollouts set image rollout-experiment rollouts-demo=docker.io/atulinfracloud/weathersample:v2

The moment we run this command, the following sequence of activities will begin:

- The canary deployment will start the rollout process.

- An experiment will start and trigger the referenced AnalysisTemplate. It will stay in the `Running` state for 5 minutes, as specified by the duration of the experiment step.

- An AnalysisRun will start based on the AnalysisTemplate and will run until the test is over.

- The AnalysisRun will end with success, but the experiment will keep running until the 5 minutes are up.

- After 5 minutes, the experiment will be marked successful and the rollout will proceed to deploy v2 of the weather app.

In the following sections, we will see each of the above-mentioned activities.

Status of Experiment

$ kubectl get Experiments

NAME STATUS AGE

rollout-experiment-698fdb45bf-2-2 Running 15s

Status of AnalysisRun

$ kubectl get AnalysisRun

NAME STATUS AGE

rollout-experiment-698fdb45bf-2-2-canary-experiment Successful 15s

Let us describe AnalysisRun and see the events that took place while the AnalysisRun was executing.

$ kubectl describe AnalysisRun rollout-experiment-698fdb45bf-2-2-canary-experiment
...
Spec:
  Metrics:
    Name:  webmetric
    Provider:
      Web:
        Timeout Seconds:  10
        URL:              https://jsonplaceholder.typicode.com/todos/4
    Success Condition:    result.completed == true
Status:
  Dry Run Summary:
  Metric Results:
    Count:  1
    Measurements:
      Finished At:  2022-09-26T07:44:54Z
      Phase:        Successful
      Started At:   2022-09-26T07:44:49Z
      Value:        {"completed":true,"id":4,"title":"et porro tempora","userId":1}
    Name:           webmetric
    Phase:          Successful
    Successful:     1
  Phase:            Successful
  Run Summary:
    Count:       1
    Successful:  1
  Started At:    2022-09-26T07:44:54Z
Events:
  Type    Reason                 Age  From                 Message
  ----    ------                 ---- ----                 -------
  Normal  MetricSuccessful       47s  rollouts-controller  Metric 'webmetric' Completed. Result: Successful
  Normal  AnalysisRunSuccessful  47s  rollouts-controller  Analysis Completed. Result: Successful

Let us now see the status of the Experiment after the AnalysisRun is successful:

$ kubectl describe Experiment rollout-experiment-698fdb45bf-2-2
...
Status:
  Analysis Runs:
    Analysis Run:  rollout-experiment-698fdb45bf-2-2-canary-experiment
    Name:          canary-experiment
    Phase:         Successful
  Available At:    2022-09-26T07:44:49Z
  Conditions:
    Last Transition Time:  2022-09-26T07:44:35Z
    Last Update Time:      2022-09-26T07:44:49Z
    Message:               Experiment "rollout-experiment-698fdb45bf-2-2" is running.
    Reason:                NewReplicaSetAvailable
    Status:                True
    Type:                  Progressing
  Phase:                   Running
  Template Statuses:
    Available Replicas:    1
    Last Transition Time:  2022-09-26T07:44:49Z
    Name:                  canary
    Ready Replicas:        1
    Replicas:              1
    Status:                Running
    Updated Replicas:      1
Events:
  Type    Reason                 Age  From                 Message
  ----    ------                 ---- ----                 -------
  Normal  TemplateProgressing    48s  rollouts-controller  Template 'canary' transitioned from  -> Progressing
  Normal  ExperimentPending      48s  rollouts-controller  Experiment transitioned from  -> Pending
  Normal  ScalingReplicaSet      48s  rollouts-controller  Scaled up ReplicaSet rollout-experiment-698fdb45bf-2-2-canary from 0 to 1
  Normal  TemplateRunning        34s  rollouts-controller  Template 'canary' transitioned from Progressing -> Running
  Normal  ExperimentRunning      34s  rollouts-controller  Experiment transitioned from Pending -> Running
  Normal  AnalysisRunPending     34s  rollouts-controller  AnalysisRun 'canary-experiment' transitioned from  -> Pending
  Normal  AnalysisRunSuccessful  29s  rollouts-controller  AnalysisRun 'canary-experiment' transitioned from  -> Successful

Argo Rollouts comes with its own GUI as well that you can access with the below command:

kubectl argo rollouts dashboard

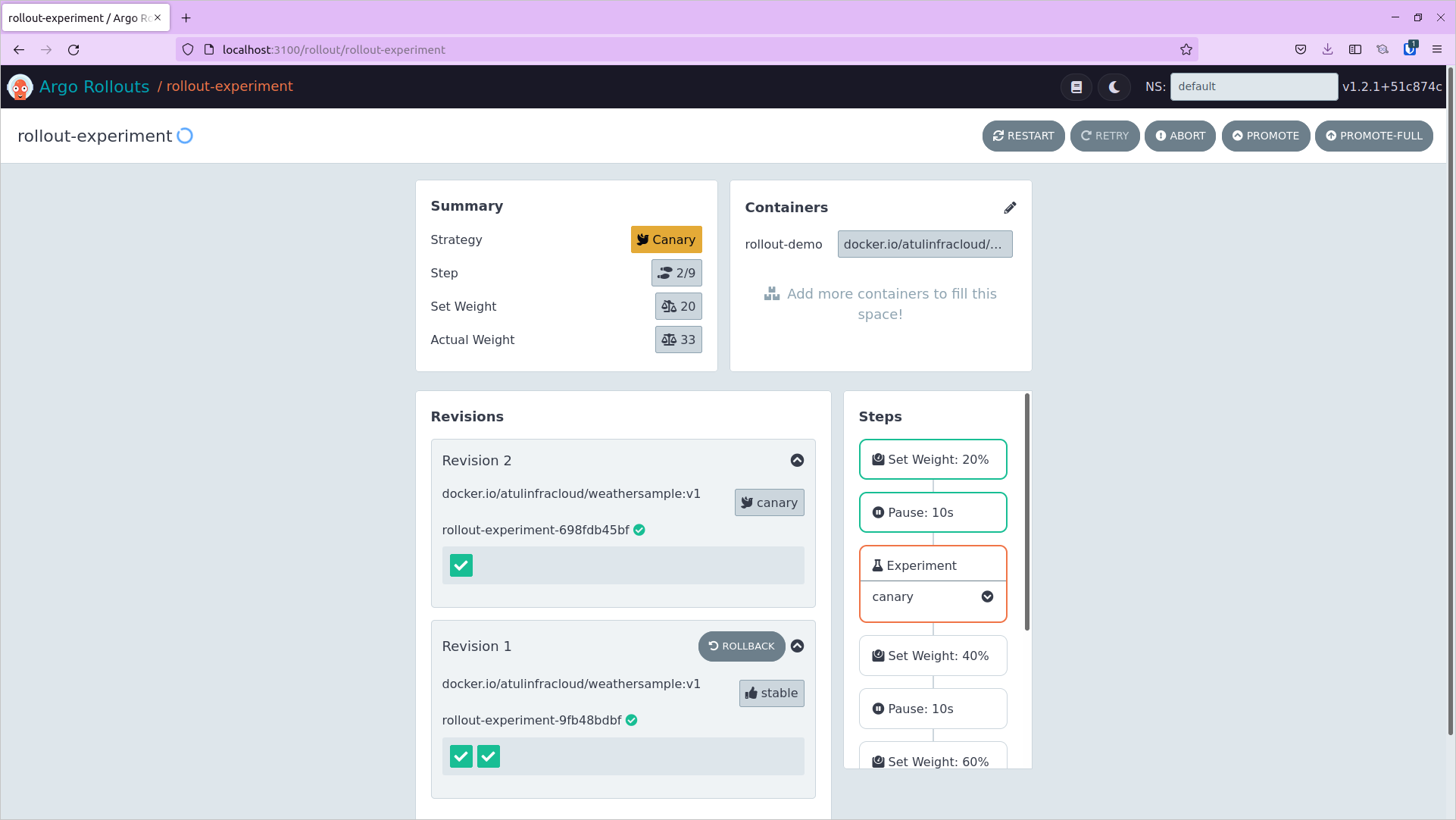

You can access the Argo Rollouts console by visiting http://localhost:3100 on your browser. If you look at the Argo Rollouts dashboard while the experiment is running, you should see something like this:

After the experiment duration has elapsed, the experiment will pass and the rollout will proceed to the next steps and eventually deploy the newer version of the application.

In real-life scenarios, the tests that you run can draw on metrics from monitoring tools such as Prometheus or Datadog, and you’ll be able to progress a rollout based on these values.

To test the failure scenario, change the successCondition in analysis.yaml to successCondition: result.completed == false. Since the result from the API is always true, this will fail the AnalysisRun and the experiment will fail. Since the experiment fails, the rollout will not proceed to the next steps and the newer version will not be deployed.

After changing the value, re-apply analysis.yaml, pass a newer image to the rollout, and inspect the resulting AnalysisRun object.

$ kubectl describe AnalysisRun rollout-experiment-fd88675b8-5-2-canary-experiment
...
Spec:
  Metrics:
    Name:  webmetric
    Provider:
      Web:
        Timeout Seconds:  10
        URL:              https://jsonplaceholder.typicode.com/todos/4
    Success Condition:    result.completed == false
Status:
  Dry Run Summary:
  Message:  Metric "webmetric" assessed Failed due to failed (1) > failureLimit (0)
  Metric Results:
    Count:   1
    Failed:  1
    Measurements:
      Finished At:  2022-09-26T08:01:04Z
      Phase:        Failed
      Started At:   2022-09-26T08:00:55Z
      Value:        {"completed":true,"id":4,"title":"et porro tempora","userId":1}
    Name:           webmetric
    Phase:          Failed
  Phase:            Failed
  Run Summary:
    Count:     1
    Failed:    1
  Started At:  2022-09-26T08:01:04Z
Events:
  Type     Reason             Age  From                 Message
  ----     ------             ---- ----                 -------
  Warning  MetricFailed       32s  rollouts-controller  Metric 'webmetric' Completed. Result: Failed
  Warning  AnalysisRunFailed  32s  rollouts-controller  Analysis Completed. Result: Failed
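
The message "failed (1) > failureLimit (0)" in this output reflects how measurements are tallied against a limit. Here is a simplified Python sketch of that rule. The function name is hypothetical, the limit of 0 for failures is taken from the message itself, the same limit for inconclusive measurements is an assumption, and the real controller handles more cases than this (errors, measurement counts, and so on).

```python
def assess_metric(measurement_phases, failure_limit=0, inconclusive_limit=0):
    """Assess a metric from the phases of its measurements.

    measurement_phases: list of strings such as "Successful", "Failed",
    or "Inconclusive", one per measurement taken so far.
    """
    failed = measurement_phases.count("Failed")
    inconclusive = measurement_phases.count("Inconclusive")
    if failed > failure_limit:
        # Mirrors: assessed Failed due to failed (N) > failureLimit (M)
        return "Failed"
    if inconclusive > inconclusive_limit:
        return "Inconclusive"
    return "Successful"


if __name__ == "__main__":
    # One failed measurement against a limit of 0, as in the demo.
    print(assess_metric(["Failed"]))
    # The same measurement would be tolerated with a limit of 1.
    print(assess_metric(["Failed", "Successful"], failure_limit=1))
```

In this demo a single failed measurement is enough to fail the metric, the AnalysisRun, and therefore the experiment.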

That was a short demo of how you can use Argo Rollouts Experiments for A/B testing in progressive delivery. The example is simple, but it shows the main capabilities involved.

Benefits of A/B Testing in Progressive Delivery

A/B testing has plenty of benefits on its own, and using it with canary deployments takes things a notch further. With this approach, we are able to:

- Run experiments for a specific duration or indefinitely, with the rollout held back until the experiment completes.

- Automatically progress a rollout based on the metrics and criteria we define.

- Recover faster when something goes wrong: if the experiment fails, the rollout halts.

Summary

Progressive delivery along with A/B Testing enables teams to perform custom A/B tests and deploy applications faster. In this blog post, we saw how you can use Argo Rollouts’ Experiment feature to perform A/B testing with canary deployment. We learned about AnalysisTemplates and AnalysisRuns and how their outputs affect the rollout.

That’s about it for this blog post. Feel free to reach out to Atul with any suggestions or queries.

And if you’re looking to modernize your application deployment process, consult with our progressive delivery experts.
