About the webinar

Traditional infrastructure works well for standard applications, but AI apps come with unique challenges such as managing large datasets, meeting high computational demands, and processing data in real time. As AI workloads grow more complex, legacy infrastructure struggles to keep up, and modern AI systems call for new infrastructure approaches.

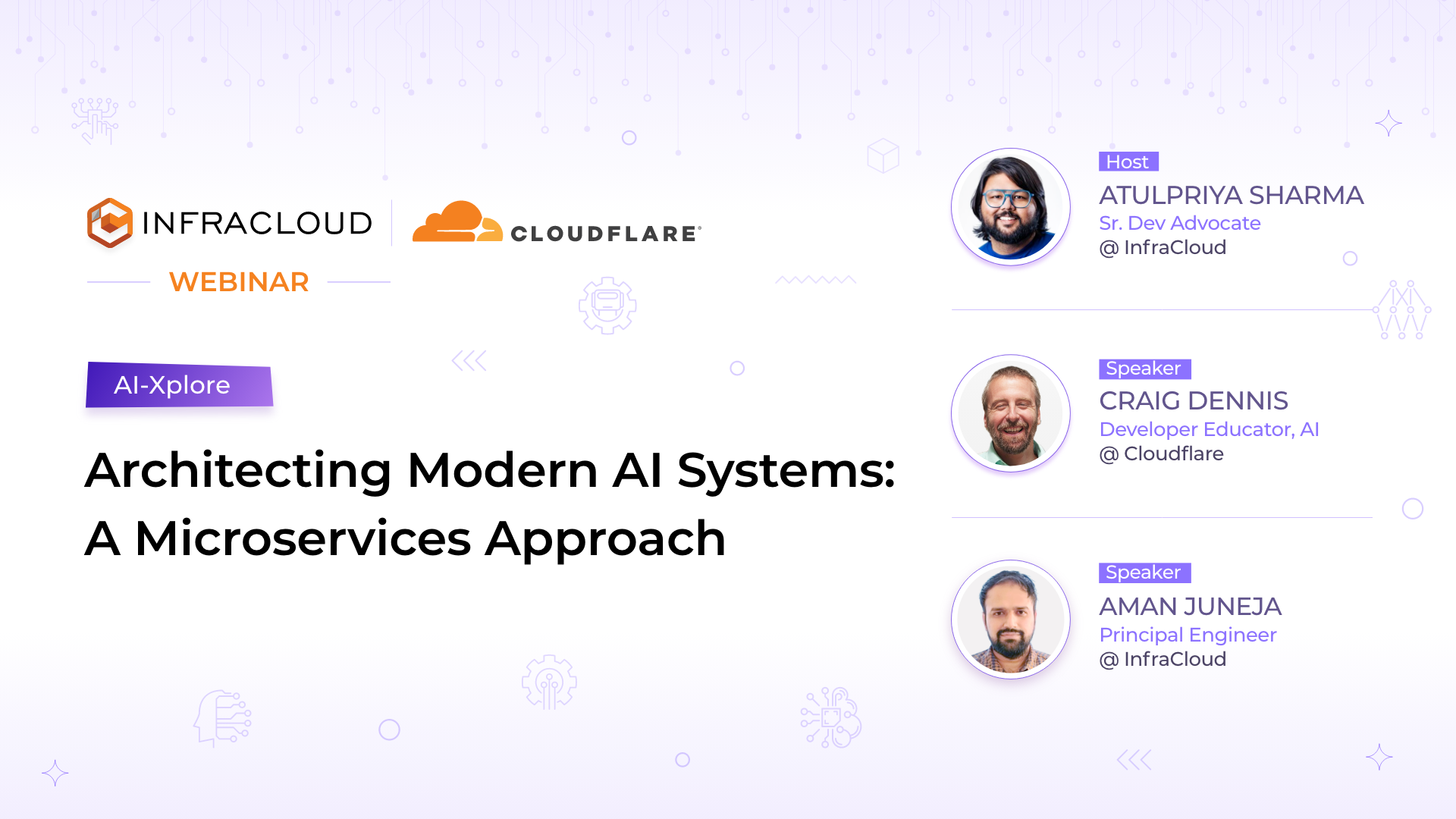

In this webinar on architecting composable AI systems, you'll learn how AI systems differ from traditional infrastructure and which modern technologies are required to support AI workloads effectively. We'll dive into the key components of modern AI systems and explore how your design decisions directly influence business outcomes. We'll also walk through essential architectural patterns, showing how microservices can be adapted for AI workloads. Subject matter experts will share the most important factors and best practices for designing AI systems, covering microservices patterns, infrastructure needs, and performance optimization.

Plus, Craig (Developer Educator, AI) from Cloudflare joins us to share his insights through real-world use cases and solutions. Join us for a practical, insightful session and get answers to your questions during the live Q&A.

What to expect

- Traditional Hosting vs AI Systems: Learn how AI infrastructure differs from traditional hosting, with a focus on specialized resources.

- Key Components of Modern AI Systems: Understand the core elements that make up modern AI systems.

- Business Implications of AI System Design Choices: Discover how your AI system design can impact costs, time-to-market, and long-term maintenance.

- Microservices Patterns for AI Systems: Explore how microservices are used to scale and manage AI workloads effectively.

- Infrastructure Requirements: Dive into the specific computing, storage, and network needs of AI systems.

- Performance Optimization: Learn strategies for optimizing AI performance, including resource allocation and load balancing.

- Practical Implementation Showcase: Watch a live demo with real-world use cases.

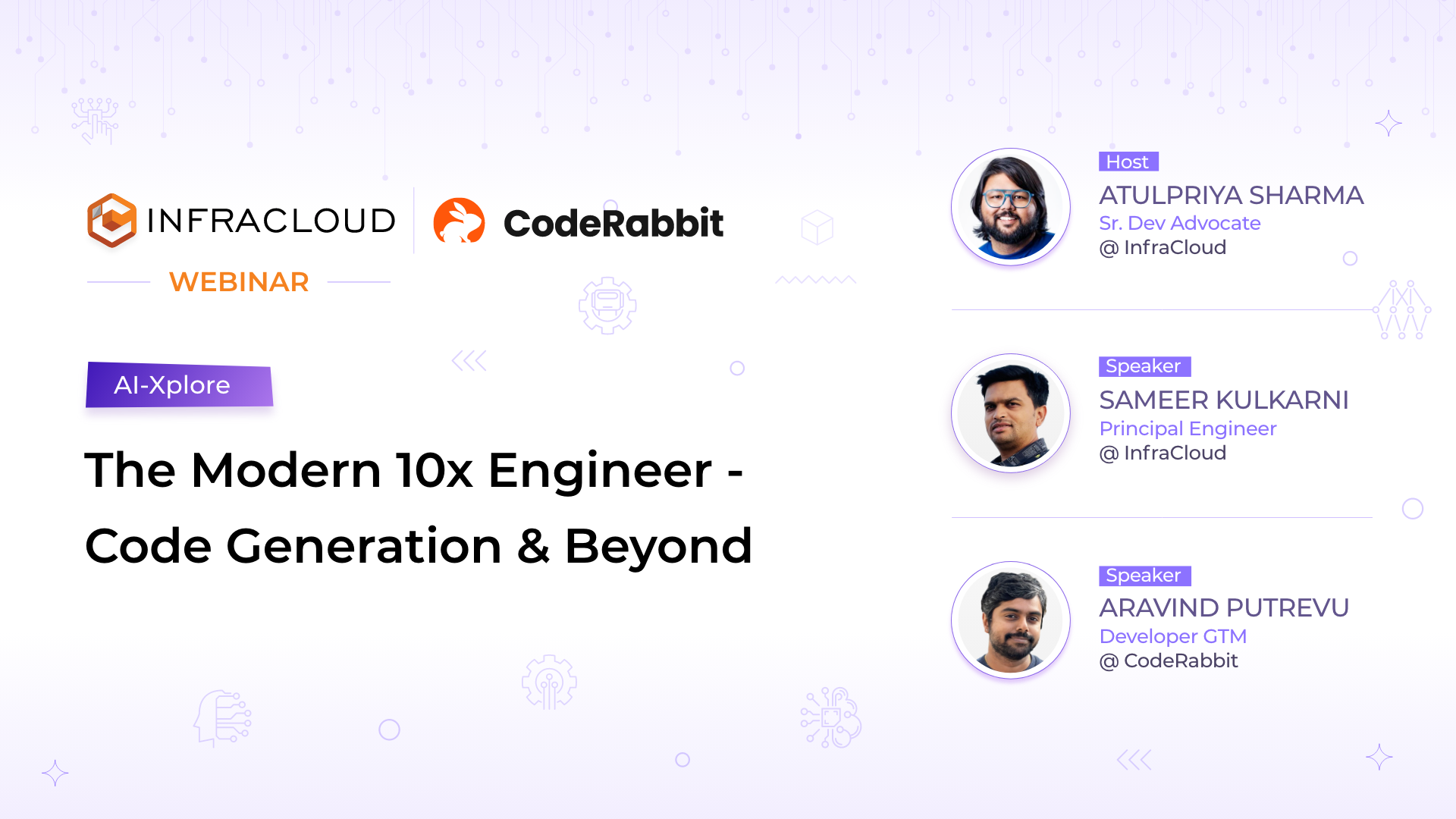

Meet the Speakers

A manual tester turned developer advocate, he talks about Cloud Native, Kubernetes, AI, and MLOps to help developers and organizations adopt cloud native technologies. He is also a CNCF Ambassador and the organizer of CNCF Hyderabad.

Craig is a software teacher, backend developer, and self-taught polyglot. As a developer, he likes to bridge the gap between ideas and implementation to ensure that both programmers and product managers are speaking the same language.

Aman specializes in AI Cloud solutions and cloud native design, bringing extensive expertise in containerization, microservices, and serverless computing. His current focus lies in exploring AI Cloud technologies and developing AI applications using cloud native architectures.

Need a clear starting point to build your own AI lab?

Leverage our AI stack charts to empower your team with faster, more efficient AI service deployment on Kubernetes.