From Docker “the standard” to Open Container Specification

Containers are an exciting piece of technology because they are not only a unit of deployment but also a unit of virtualization. With containers we are moving a level up from VMs, but containers are not a new technology. They have long existed in the form of LXC, Solaris Zones and others. What Docker did was create a user-friendly interface that unlocked the power of containers.

Docker is not the only container runtime; there are other implementations too, such as Rocket (rkt) from CoreOS. There are also minimalist, Docker-focused operating systems like CoreOS and RancherOS. What makes the space even more interesting is the launch of the Open Container Initiative (OCI), which announced its technical governance structure at the end of 2015.

The move from Docker being the de facto standard to an industry consortium coming together to define what the container image format and runtime will look like is an interesting and important development, especially from a technical point of view. In this blog we will touch upon the systemd - Docker incompatibility and how it eventually led to CoreOS coming up with Rocket, while Rancher created a Docker-focused OS.

systemd

In traditional Linux systems, the init system is responsible for spawning user processes during boot and bringing up user space. init is the first process to be spawned, and it becomes the parent of any orphaned process. If you look at any Linux distro with the SysV init system, you will find init as PID 1:

[root@srv ~]# ps ax

PID TTY STAT TIME COMMAND

1 ? Ss 0:01 /sbin/init

But if you look at a recent distro that uses systemd, e.g. Ubuntu 15.04 onward, you will see that systemd is PID 1:

[root@srv ~]# ps ax

PID TTY STAT TIME COMMAND

1 ? Ss 0:01 /usr/lib/systemd/systemd --switched-root --system --deserialize 21

2 ? S 0:00 [kthreadd]

3 ? S 0:00 [ksoftirqd/0]

systemd is an init system that was introduced to replace sysvinit. There are two big problems with sysvinit which systemd solves:

1. The SysV init system is slow because it serializes the spawning of processes during boot. Until the previous service has finished starting, the others have to wait.

2. init is responsible for bringing up user space, so logically it should be able to monitor all the processes. But sysvinit cannot do so in the case of "double forking". Daemons are usually created using double forking so that they detach from whatever launched them and do not leave zombie processes behind: the launcher forks a child, the child forks the daemon and exits, and init becomes the daemon’s parent. So say process A does a double fork and creates process B. init does not understand the relation between process A and process B. And since the intermediate parent is dead, we cannot terminate process B by terminating process A.
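The double fork described above can be reproduced in a few lines. The following is a minimal sketch, assuming a Unix-like system; the function name `double_fork` and the pipe used to report back are illustrative choices, not part of any init system. It shows that once the intermediate process exits, the daemon's parent is no longer the intermediate process that created it.

```python
import os
import time


def double_fork():
    """Double-fork and return (original_pid, middle_pid, daemon_pid, daemon_parent_pid)."""
    original_pid = os.getpid()
    read_fd, write_fd = os.pipe()

    middle_pid = os.fork()
    if middle_pid == 0:
        # Intermediate process: remember our own PID, fork the daemon, then exit.
        os.close(read_fd)
        middle_self = os.getpid()
        if os.fork() == 0:
            # Daemon ("process B"): wait until the intermediate parent is gone
            # and the kernel has handed us over to a new parent.
            while os.getppid() == middle_self:
                time.sleep(0.01)
            os.write(write_fd, f"{os.getpid()} {os.getppid()}".encode())
            os._exit(0)
        os._exit(0)

    # Original process ("process A"): reap the intermediate child, then read the report.
    os.close(write_fd)
    os.waitpid(middle_pid, 0)
    with os.fdopen(read_fd) as reader:
        daemon_pid, daemon_parent_pid = map(int, reader.read().split())
    return original_pid, middle_pid, daemon_pid, daemon_parent_pid


if __name__ == "__main__":
    original, middle, daemon, daemon_parent = double_fork()
    print(f"process A: {original}, intermediate: {middle}")
    print(f"daemon B: {daemon}, now parented by PID {daemon_parent}")
```

On a typical system the reported parent is PID 1 (or another process acting as a reaper), which is why killing process A has no effect on process B.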

systemd reduces boot time by parallelizing daemon startup. It improves upon sysvinit by leveraging the fact that daemons are usually just waiting on other daemons’ sockets to serve requests. So systemd creates all the sockets in a first step and starts all the daemons in the next. The kernel's socket buffers then take care of synchronisation: a request sent to a socket whose daemon is not up yet simply waits in the buffer until the daemon is ready to read it.
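The buffering behaviour that makes this work can be seen without systemd at all. The sketch below is an illustration only: it does not use systemd's actual socket-passing mechanism, and the function name and socket path are made up for the example. It creates a listening Unix socket first, lets a client connect and send a request, and only afterwards runs the "daemon" code that accepts and reads.

```python
import os
import socket
import tempfile


def socket_before_daemon(message=b"hello"):
    """Create the socket first, start serving later, and return what the late server reads."""
    with tempfile.TemporaryDirectory() as tmp:
        path = os.path.join(tmp, "demo.sock")  # hypothetical socket path

        # Step 1: the socket exists and listens before any daemon code runs.
        listener = socket.socket(socket.AF_UNIX, socket.SOCK_STREAM)
        listener.bind(path)
        listener.listen(5)
        listener.settimeout(5)

        # A client connects and sends its request; nobody has called accept() yet.
        client = socket.socket(socket.AF_UNIX, socket.SOCK_STREAM)
        client.settimeout(5)
        client.connect(path)
        client.sendall(message)
        client.shutdown(socket.SHUT_WR)

        # Step 2: the "daemon" starts late and finds the request waiting in the buffer.
        conn, _ = listener.accept()
        conn.settimeout(5)
        chunks = []
        while True:
            data = conn.recv(4096)
            if not data:
                break
            chunks.append(data)

        conn.close()
        client.close()
        listener.close()
        return b"".join(chunks)


if __name__ == "__main__":
    print(socket_before_daemon(b"request sent before the daemon started"))
```

The client never notices that the server was not ready; the ordering between the two is handled entirely by the kernel.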

By using cgroups, systemd is able to track all processes. Even if a process double forks, it cannot escape its cgroup hierarchy and thus is not lost. In the last couple of years systemd has become something of a standard, as most major Linux distributions have adopted it.
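On Linux, the cgroup membership of any process is visible in `/proc/<pid>/cgroup`, where each line has the form `hierarchy-ID:controller-list:cgroup-path`. The following is a minimal sketch of a parser for that file; the function name and the sample paths are illustrative assumptions.

```python
def parse_proc_cgroup(text):
    """Parse the contents of /proc/<pid>/cgroup into (hierarchy_id, controllers, path) tuples."""
    entries = []
    for line in text.splitlines():
        line = line.strip()
        if not line:
            continue
        # Split only on the first two colons; the cgroup path may itself contain colons.
        hierarchy_id, controllers, path = line.split(":", 2)
        controller_list = controllers.split(",") if controllers else []
        entries.append((int(hierarchy_id), controller_list, path))
    return entries


if __name__ == "__main__":
    try:
        with open("/proc/self/cgroup") as handle:
            for entry in parse_proc_cgroup(handle.read()):
                print(entry)
    except FileNotFoundError:
        print("/proc/self/cgroup is not available on this system")
```

A process started by a unit typically shows a path ending in that unit's name, and children created by double forking inherit the same path, which is what lets the service manager find them again.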

With a good understanding of systemd, let’s take a look at why Docker and systemd do not play well together.

Docker & systemd

Docker was written when systemd was yet to gain traction, and Docker containers needed a daemon which would:

* set up resources

* serve the remote Docker API

* track the state of containers

* clean up containers

As you can see, the Docker daemon is itself a pseudo init system. The incompatibility between Docker and systemd arises because both try to control the cgroups. systemd was adopted because it is able to monitor all the processes it spawns and, with cgroups, solves the process tracking and zombie reaping problem. But the way Docker runs containers breaks this model.

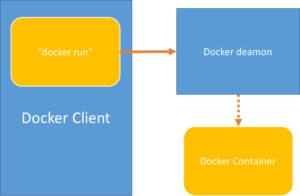

Figure: Docker and systemd

With Docker, whenever a Docker client runs the “docker run” command, it makes an API call to the Docker daemon, and the daemon launches the new container. This new container is created in a different cgroup than the Docker client, and thus it can no longer be tracked by systemd. Suppose you kill the Docker client: the container will still be running, but systemd will show that the unit has stopped.

Now what if you restart the systemd unit? You will get two containers. One solution could be to do “if container exists; then docker rm -f container; fi; docker run …” but systemd unit files don’t support such conditional statements. It is therefore very hard for Docker and systemd to work together in harmony.
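The conditional above could live in a small wrapper that the unit file calls instead of calling Docker directly. The following is a sketch under assumptions: the function name, the container name and the injectable `run` parameter are hypothetical, and it relies on `docker container inspect` exiting with a non-zero status when the container does not exist. It only avoids the duplicate container; it does not make systemd track the container's processes.

```python
import subprocess


def run_fresh_container(name, image, args, run=subprocess.run):
    """Remove a leftover container with this name, if any, then start a new one."""
    # A non-zero exit status from inspect means there is no container with this name.
    probe = run(
        ["docker", "container", "inspect", name],
        stdout=subprocess.DEVNULL,
        stderr=subprocess.DEVNULL,
    )
    if probe.returncode == 0:
        # A container from a previous start is still around: force-remove it.
        run(["docker", "rm", "-f", name], check=True)
    # Start the replacement under the same name, in the foreground.
    return run(["docker", "run", "--name", name, image, *args], check=True)


if __name__ == "__main__":
    # Hypothetical name and command, for illustration only.
    run_fresh_container("hello", "busybox", ["/bin/sh", "-c", "echo Hello World"])
```

Passing the runner as a parameter keeps the logic testable without a Docker daemon; in real use the default `subprocess.run` is what executes the commands.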

CoreOS

CoreOS came out with its Docker-focused OS so that it would become the go-to distribution for running Docker. The approach they took was to make sure systemd and Docker work together. All the CoreOS services work with systemd; for example Fleet, the container scheduler, interfaces with systemd. So even though CoreOS is a Docker-focused distribution, it is actually built around systemd, and systemd unit files run Docker. It was a conscious decision on their part to integrate CoreOS with systemd.

Example of running a container with a systemd unit file:

[Unit]

Description=My Service

Requires=docker.service

After=docker.service

[Service]

ExecStart=/usr/bin/docker run busybox /bin/sh -c "while true; do echo Hello World; sleep 1; done"

[Install]

WantedBy=multi-user.target

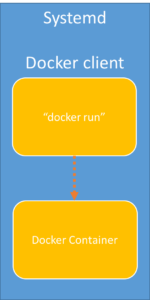

But then why did CoreOS go for systemd?

systemd is a modern, well-accepted init system, and you can build other services around it, e.g. Fleet. Sysadmins are also well versed in managing services with systemd given its wide adoption. The CoreOS founders were well aware of the incompatibility, and they were of the opinion that the Docker daemon should not own the fork; that is best left to systemd. They proposed running Docker in a standalone mode, with systemd supervising it. So their approach was to change Docker so that it works well with systemd. This did not go down well with the Docker team, who did not agree to changes that touch something so fundamental to Docker.

Figure: Standalone mode for Docker

This led to CoreOS calling Docker “fundamentally flawed” and announcing Rocket, a new container runtime. Rocket works in standalone mode. It does not have a daemon: when you do a “rkt run”, the container is forked from the process that ran “rkt run” and is therefore tracked properly by the systemd init process.

What about RancherOS?

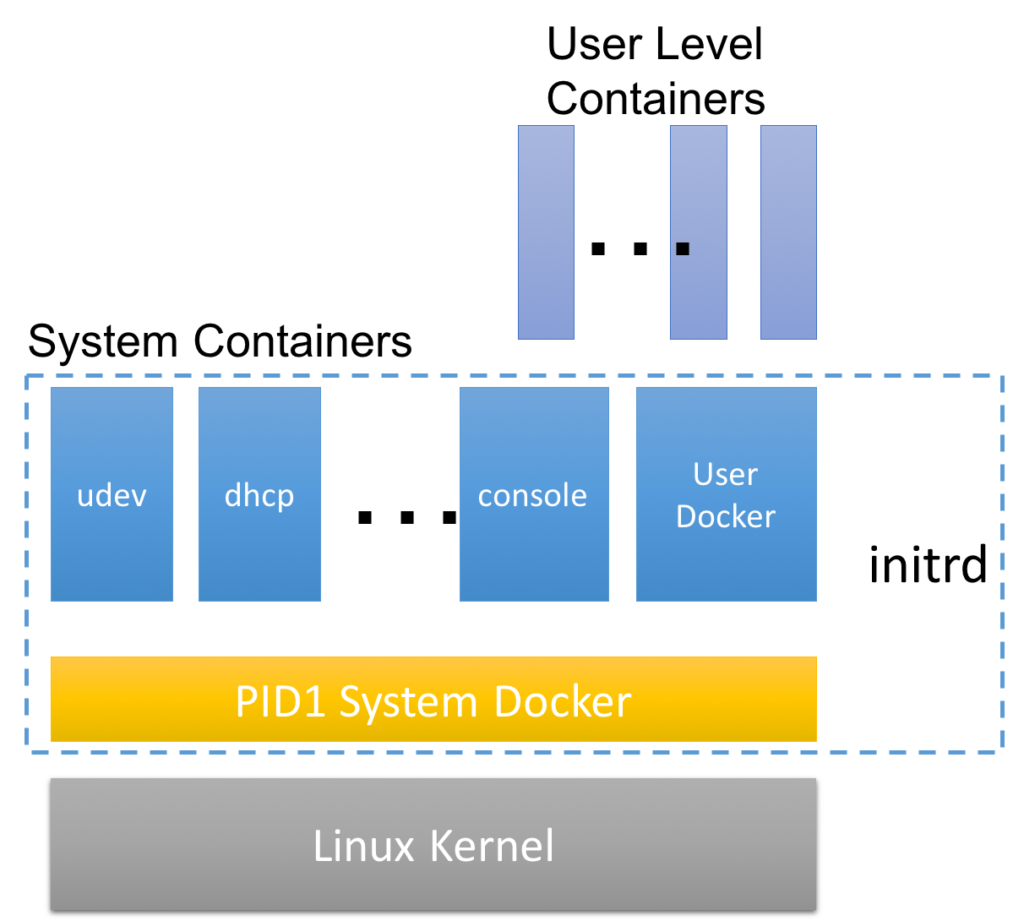

Rancher is of the opinion that Docker is a new paradigm altogether, and that changing the core of Docker could affect Docker’s vision; changing Docker to work with systemd would be going backwards. This led to Rancher coming up with native Docker support, where Docker containers are first-class citizens.

RancherOS is a minimalist OS which always runs the latest Docker. System Docker runs the Docker daemon as PID 1, and all the system services run as Docker containers. User Docker is a separate container which runs a Docker daemon and manages all the user containers. RancherOS has solved the systemd/Docker incompatibility by going all in on the Docker daemon, which replaces the init system.

Open Container Initiative

When CoreOS announced Rocket as an alternative to Docker, they also started working on a container specification, appc. The expectation was to have a container specification that is accepted by everyone and that can have multiple implementations.

The different approaches taken by Docker and CoreOS on how containers should be run resulted in different container implementations and the possibility that Docker might not be the standard. OCI was therefore proposed to ensure portability and convertibility of containers across different implementations.

The primary goals for OCI are listed below and explained in this post:

* Users should be able to package their application once and have it work with any container runtime (like Docker, rkt, Kurma, or Jetpack)

* The standard should fulfill the requirements of the most rigorous security and production environments

* The standard should be vendor neutral and developed in the open

appc defines several independent but composable aspects involved in running application containers, including an image format, a runtime environment, and a discovery mechanism for application containers. appc was the first proposed standard for a container spec.

Just after DockerCon 2015, stakeholders such as Docker, CoreOS, Rancher Labs, Google and Microsoft came together and came up with the Open Container Specification. It is the successor of appc, which was the first attempt at standardizing container specifications. The appc team joined the OCI and decided to work together to bring the best of both ideas into OCI.

Docker also contributed its runC project to OCI. runC is a universal container runtime; it is the heart of Docker and abstracts the details of the host for portability. runC follows the standards laid down by the Open Container Specification.

In just three years of Docker's existence, we have seen Docker become a standard in containerisation thanks to its user friendliness. But the incompatibilities between systemd and the Docker daemon, and the differing approaches to solving them, led to CoreOS and Docker parting ways. Rancher took a very interesting approach and built a new OS that replaces the init system with Docker containers.

Finally, competing container runtimes and formats led to the setting up of an industry consortium to standardise containers, which agreed on a common Open Container Specification. This is a huge development, and the joint efforts of the consortium will go a long way in enabling innovation while standardizing specs.
