The MCP Gateway: Enabling Secure and Scalable Enterprise AI Integration

The Model Context Protocol (MCP) ecosystem has exploded with community-built servers that developers describe as tools they “can’t live without.” From web automation to documentation management, these specialized servers solve specific productivity challenges and have become integral to AI-powered workflows. Yet as teams scale from one or two MCP servers to dozens, they face a critical challenge that threatens to undermine the very productivity gains these tools provide.

Securing each MCP server individually leads to repetitive work and fragmented controls. As the number of servers grows, teams face mounting challenges: duplicating OAuth and RBAC setups, managing inconsistent access across tools, and dealing with inevitable configuration drift. What began as a productivity boost quickly turns into an operational burden.

In this blog post, we will cover how to solve these scaling challenges using an MCP Gateway as a centralized integration hub. We’ll walk through the technical implementation of consolidating multiple community MCP servers behind a single secure gateway, explore real-world productivity workflow, and examine advanced security features for enterprise deployments. By the end, you’ll have a production-ready gateway that transforms isolated tools into a cohesive AI productivity platform.

The scaling problem: when success becomes complexity

What happens when your carefully chosen MCP servers multiply from a manageable handful to dozens of critical tools? The answer isn’t just “more work”, it’s exponentially more complexity that can cripple the very productivity you’re trying to achieve.

Security fragmentation

Individual OAuth setups per server create multiple points of failure and inconsistent security postures. Each server maintains its own authentication state and session management, along with unique authorization policies and role definitions. Token validation and refresh logic vary between implementations, while security event logging and monitoring are fragmented across systems. The fragmentation makes it nearly impossible to implement organization-wide security policies and creates dangerous blind spots in security monitoring.

Operational overhead

The operational burden grows with each additional MCP server. Configuration management becomes a constant challenge as teams struggle to keep server configurations synchronized and up-to-date across different deployment environments. Monitoring transforms from a single dashboard into multiple monitoring endpoints with separate alerting configurations. Troubleshooting requires deep knowledge of each MCP server’s implementation details, while performance optimization demands individual tuning strategies for every server in your stack.

Developer experience issues

From a developer’s perspective, the challenges compound daily. Tool discovery becomes increasingly difficult without a central registry of available tools and capabilities. Integration complexity multiplies as each MCP server implements different connection patterns and authentication requirements. Multi-system debugging turns into a nightmare of tracing requests across multiple servers with different logging formats and debug interfaces. Documentation fragments across repositories, making it harder to understand how tools work together.

Solution: MCP gateway as a productivity control center

Rather than accepting the complexity as inevitable, there’s a better architectural approach, an MCP Gateway.

What is an MCP gateway?

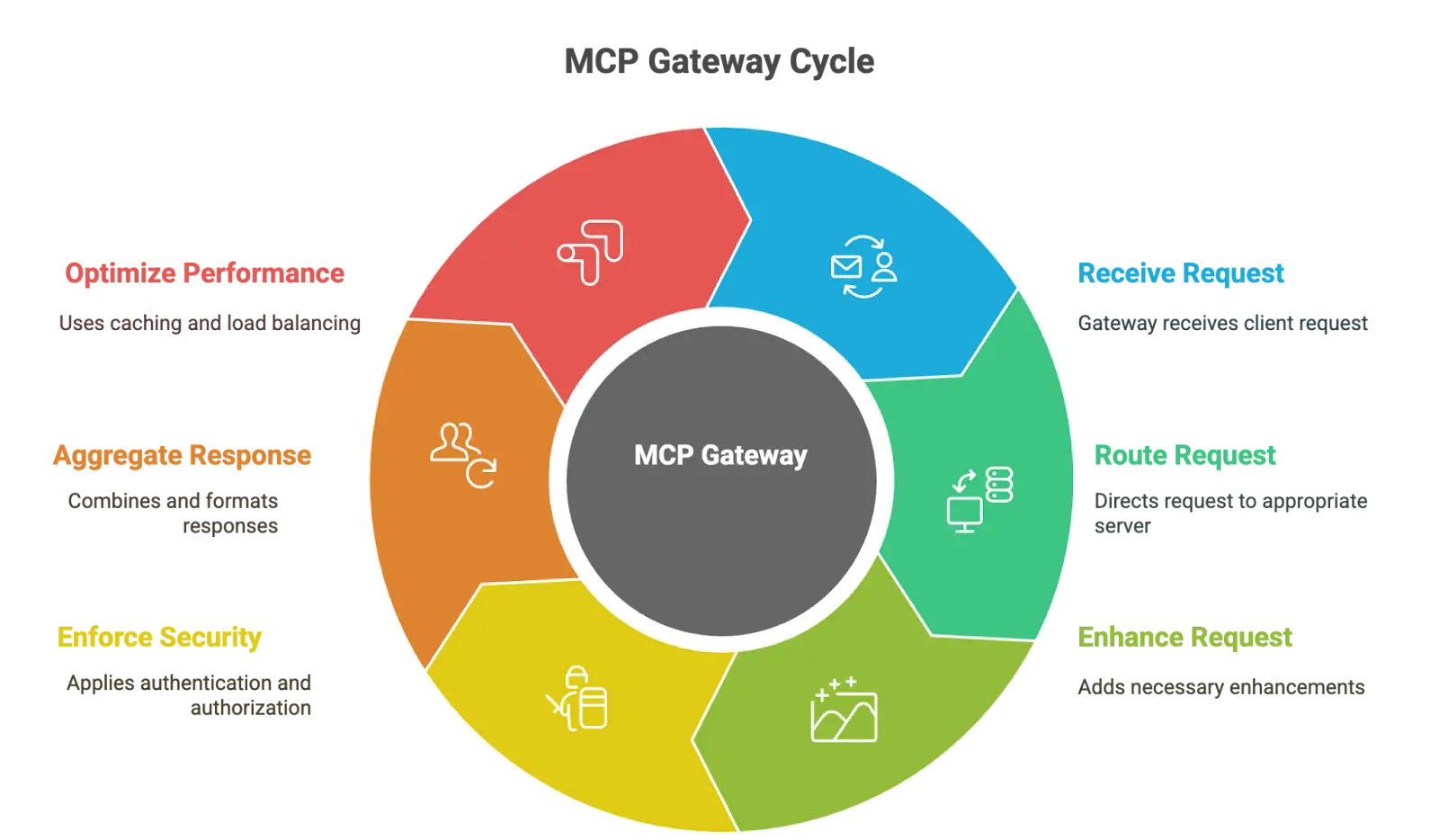

An MCP Gateway functions as a reverse proxy and orchestrator positioned between LLM clients and MCP servers. You can think of it as an intelligent traffic controller that routes requests and enhances them. The gateway abstracts the complexity of backend tool execution while providing a unified interface for clients. It handles:

- Request routing to appropriate backend servers

- Enforces authentication and authorization consistently

- Aggregates and formats responses

- Implements security policy enforcement

- Optimizes performance through caching and load balancing

Architectural principles

The gateway operates on three core principles that solve the scaling problems we’ve identified.

- Separation of concerns: It means the gateway offloads security, authentication, and routing responsibilities from individual tool servers, allowing them to focus purely on their core functionality.

- Unified endpoint principle: It ensures clients connect to a single gateway endpoint rather than managing connections to multiple servers, dramatically simplifying client implementation and configuration.

- Policy enforcement principle: It centralizes security policies, rate limits, and access controls so they’re applied consistently across all backend servers through the gateway layer.

This architecture simplifies MCP integration and management without reducing flexibility.

Benefits of MCP Gateway for development teams

The gateway enables unified tool discovery, so clients can list and use tools from many MCP servers without knowing where they’re hosted. It removes the need for hardcoded routing logic by determining the best server for each request. Centralized authentication and policy controls reduce duplication and ensure consistency across tools. Performance improves through shared caching, rate limits, and automatic load distribution, all handled at the gateway layer.

In short, the gateway model replaces fragmented infrastructure with a manageable, extensible entry point for AI-powered productivity workflows.

Hands-on: building your productivity-focused MCP gateway

Several MCP gateway implementations are now available, all with open source projects or components. Choosing the right one depends on your technical needs, security requirements, and deployment environment. Here are a few notable options:

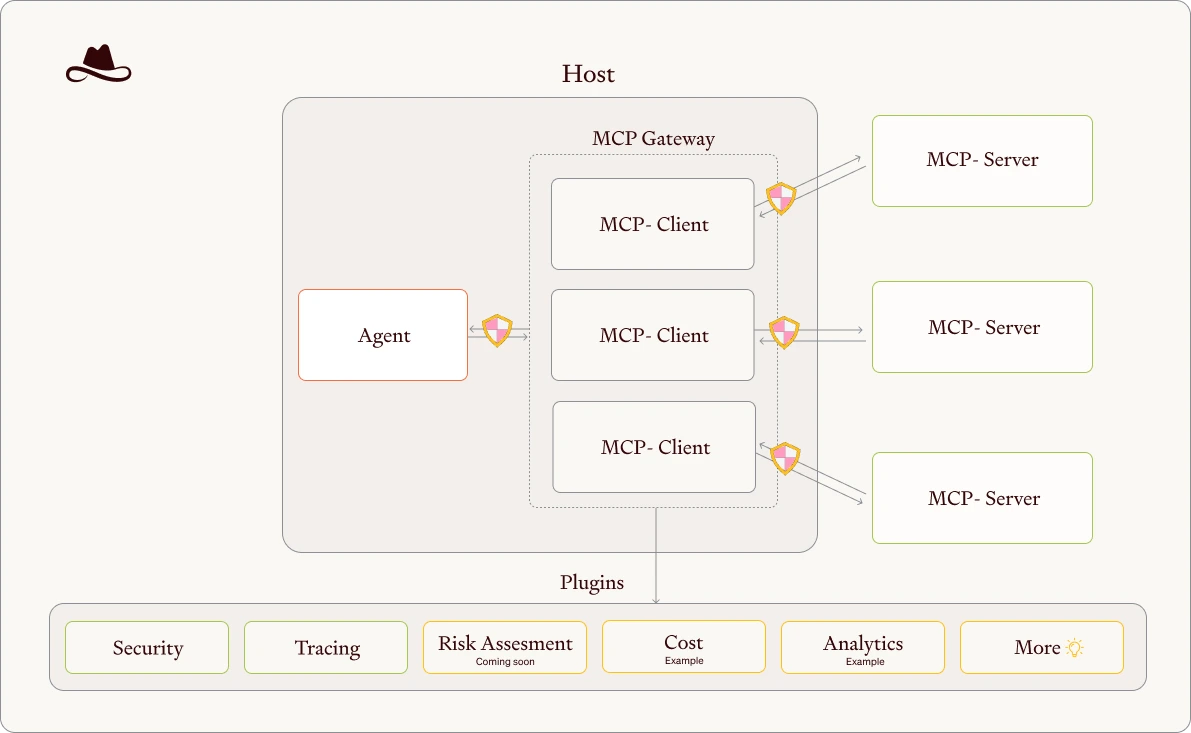

- Lasso MCP Gateway: A security-focused gateway with support for token masking, PII detection, and prompt injection filters. It uses a plugin system to apply different protections.

- WunderGraph MCP Gateway: Designed for GraphQL-based systems. It includes schema-based discovery and role-based access control.

- Zuplo Remote MCP Servers: Turns any API into an MCP server. Offers logging, threat filters, and options for production deployments.

- kgateway: A simple gateway that supports basic authorization and is easy to set up.

- IBM MCP Gateway: Built on FastAPI for large-scale enterprise use.

- Unla by AmoyLab: A basic orchestrator maintained by the community, suited for testing and development.

In this guide, we will use Lasso’s open source gateway. It provides the features we need and is easy to extend. It offloads authentication and authorization, applies security filters like token masking and Personally Identifiable Information (PII) detection, and routes requests to the appropriate backend MCP server.

In addition to these controls, Lasso Gateway also provides centralized monitoring and observability. With plugins like Xetrack, teams can track which tools are used, detect security events, and monitor system performance, all from a single log stream. The inbuilt observability makes it easier to debug workflows, audit activity, and understand how tools are being used in practice.

We’ll build an MCP Gateway that connects four tools:

- Kubernetes diagnostics server

- GitHub server for code and issue tracking

- Context7 server for documentation lookup

- Reasoning server for structured planning

All requests will go through one gateway. You’ll secure it using plugins that block secrets, detect sensitive data, and stop risky behavior.

Prerequisites

Before implementing any MCP gateway, you need a foundation of working MCP servers and a compatible client environment. So, make sure the following setup is ready:

- Multiple MCP Servers

- K8s-diagnostics: Detecting cluster issues

- Github: Finding commits/issues and creating new ones

- Context7: Looking up documentation and runbooks

- Sequential-thinking: Structuring a remediation plan

- Claude Desktop or a compatible MCP client to interact with the gateway

- Python 3.x: Required to run the Lasso MCP Gateway locally.

(We suggest using a virtual environment to avoid dependency conflicts.) - API Keys: GitHub token for GitHub MCP server.

- Kubeconfig: Required by the Kubernetes diagnostics server to inspect your local or remote cluster.

Setup instructions

-

Install gateway: The installation process is straightforward, but we’ll also set up optional dependencies that enable advanced features:

pip install mcp-gatewayThis installs the base gateway with basic functionality. For production deployments, you’ll likely want additional security plugins.

-

Configure backend servers: Configuration determines which MCP servers your gateway will orchestrate and what security policies apply. This step is crucial because it defines your entire MCP ecosystem:

Create a file that lists all the MCP servers (mcp.json) you want to use. In this example, we’ll include the GitHub, Kubernetes diagnostics, Context7, and Sequential Thinking servers.

{ "mcpServers": { "mcp-gateway": { "command": "/Users/<your_path_to_python>/venv/bin/python", "args": [ "-m", "mcp_gateway.server", "--mcp-json-path", "/Users/<your_path_to_mpc.json>/mcp.json", "--plugin", "basic" ], "servers": { "k8s-diagnostics": { "command": "/Users/<your_path_to_k8s-diagnostics-mcp-server>k8s-diagnostics-mcp-server/bin/k8s-diagnostics-mcp-server", "args": [], "env": { "KUBECONFIG": "/Users/<your_path_to_kubeconfig>/.kube/config" } }, "github": { "command": "/Users/<your_path_to_github-mcp-server>/github-mcp-server/bin/github-mcp-server", "args": [], "env": { "GITHUB_TOKEN": "<your_github_token>" } }, "context7": { "command": "npx", "args": ["-y", "@upstash/context7-mcp"] }, "sequential-thinking": { "command": "npx", "args": [ "-y", "@modelcontextprotocol/server-sequential-thinking" ] } } } } } -

Enable security plugins: The MCP Gateway significantly enhances security by centralizing controls and supports various security plugins. These plugins offer capabilities such as token and secret masking, PII detection, and advanced threat analysis like prompt injection protection. These features prevent sensitive data exposure and mitigate common AI-specific threats.

Basic security plugin - Essential token protection with 12 built-in patterns:

mcp-gateway --mcp-json-path ~/mcp.json -p basicOutput: “BasicGuardrailPlugin loaded. Secret patterns enabled: 12”

Verification: Check logs for successful pattern loading

Advanced PII detection - Comprehensive data protection using Microsoft Presidio:# First install dependencies pip install mcp-gateway[presidio] # Then run with both plugins mcp-gateway --mcp-json-path ~/mcp.json -p basic -p presidioPurpose: Detects names, emails, phone numbers, SSNs, and other PII

Verification: Should not show “Presidio libraries not found” warningEnterprise security - Complete threat protection with prompt injection detection:

# Set API key first export LASSO_API_KEY="your-api-key" # Run with enterprise protection mcp-gateway --mcp-json-path ~/mcp.json -p basic -p lassoPurpose: Advanced AI threat analysis and prompt injection protection Verification: Should not show “Lasso API key not provided” warning

-

Start the gateway: Once configured, the gateway will activate your security policies and begin proxying MCP requests. The startup process validates all configurations and establishes connections to backend servers:

mcp-gateway --mcp-json-path ~/mcp.json -p basicOutput:

2025-06-25 20:49:11,384 - mcp_gateway.server - INFO - Starting MCP gateway server directly... 2025-06-25 20:49:11,386 - mcp_gateway.server - INFO - MCP gateway lifespan starting... 2025-06-25 20:49:11,386 - mcp_gateway.plugins.manager - INFO - Registered plugin: BasicGuardrailPlugin (type: guardrail) 2025-06-25 20:49:11,386 - mcp_gateway.plugins.manager - INFO - Registered plugin: LassoGuardrailPlugin (type: guardrail) . . . 2025-06-25 20:49:12,323 - mcp_gateway.server - INFO - Dynamic registration process complete. Attempted to register 18 tools and 0 prompts with FastMCP.For troubleshooting during initial setup, debug logging reveals configuration issues and connection problems:

LOGLEVEL=DEBUG mcp-gateway --mcp-json-path ~/mcp.json -p basic -p presidio

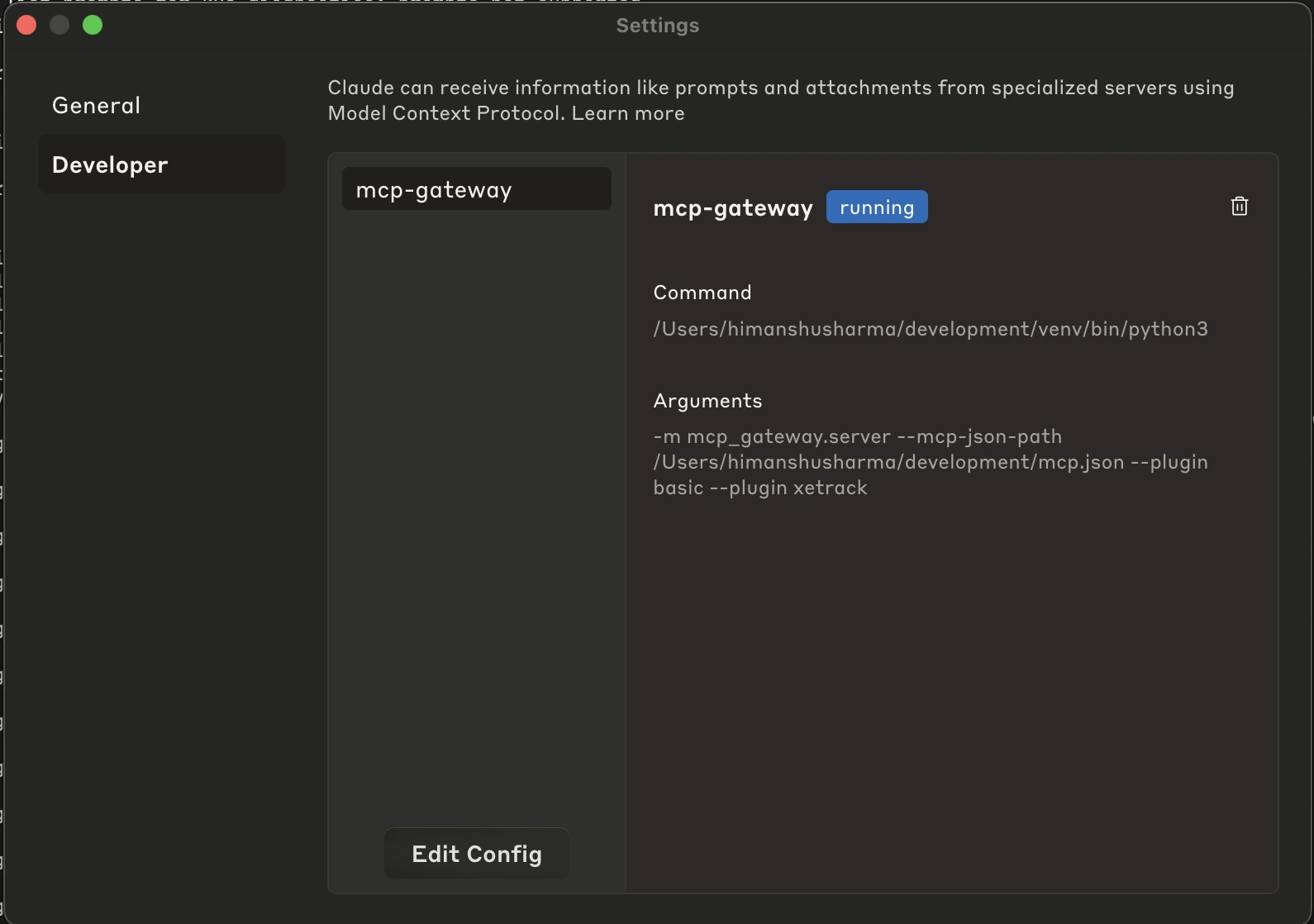

Integration with Claude Desktop

Claude Desktop integration requires updating your Claude configuration to use the gateway instead of direct MCP server connections. This centralizes all MCP traffic through your security policies:

Update your Claude Desktop with the same configuration as we did recently for mcp.json by editing the configuration file (e.g., ~/Library/Application Support/Claude/claude_desktop_config.json on macOS):

{

"mcpServers": {

"mcp-gateway": {

"command": "/Users/<your_path_to_python>/bin/python",

"args": [

"-m",

"mcp_gateway.server",

"--mcp-json-path",

"/Users/<your_path_to_mpc.json>/mcp.json",

"--plugin",

"basic",

"--plugin",

"xetrack"

],

"servers": {

"k8s-diagnostics": {

"command": "/Users/<your_path_to_k8s-diagnostics-mcp-server>k8s-diagnostics-mcp-server/bin/k8s-diagnostics-mcp-server",

"args": [],

"env": {

"KUBECONFIG": "/Users/<your_path_to_kubeconfig>/.kube/config"

}

},

"github": {

"command": "/Users/<your_path_to_github-mcp-server>/github-mcp-server/bin/github-mcp-server",

"args": [],

"env": {

"GITHUB_TOKEN": "<your_github_token>"

}

},

"context7": {

"command": "npx",

"args": ["-y", "@upstash/context7-mcp"]

},

"sequential-thinking": {

"command": "npx",

"args": [

"-y",

"@modelcontextprotocol/server-sequential-thinking"

]

}

}

}

}

}

This configuration replaces individual MCP server entries with a single gateway entry that manages all backend servers.

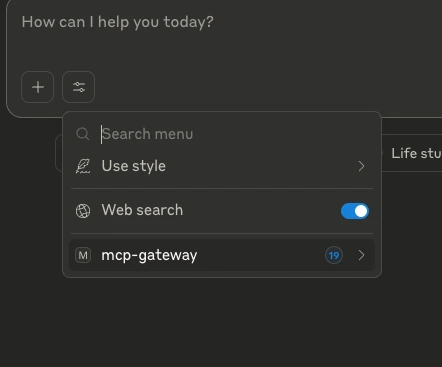

Restart Claude Desktop: For changes to take effect. You can also go back to Developer Settings of Claude Desktop to check the configuration:

Let’s now go ahead and test it in a real-world scenario.

Real-world productivity scenario

Our MCP Gateway set up is complete with four integrated servers (Kubernetes diagnostics, GitHub, Context7, and Sequential Thinking) all secured behind a single gateway. Let’s see how this unified architecture delivers tangible benefits in a real-world incident response scenario.

Instead of the traditional fragmented workflow where teams jump between kubectl commands, GitHub repositories, documentation systems, and monitoring dashboards, our gateway setup enables a streamlined conversational interface that connects to all operational systems securely and guides structured resolution.

The problem: traditional incident response complexity

In any growing engineering organization, incidents are inevitable, services go down, containers fail to start, and root causes aren’t always obvious. Teams often scramble to triage the problem, switching between multiple tools and interfaces to piece together what happened.

This fragmented workflow not only slows down response time but also introduces critical risks:

- Partial visibility across infrastructure, code, and documentation

- Inconsistent decision-making under pressure

- Missed steps in the recovery process

- Weak audit trails for compliance and learning

The solution: our integrated gateway in action

Using the exact setup we configured earlier, our MCP Gateway with K8s diagnostics, GitHub, Context7, and Sequential Thinking servers, let’s walk through how a complete incident response workflow becomes streamlined into a single conversational interface.

Scenario setup: the failure chain

Let’s walk through an incident scenario using four MCP servers routed through a single secure MCP Gateway:

- An alert fires from the cluster, something is failing in the demo-app namespace

- We diagnose the issue using our Kubernetes diagnostics server through the gateway

- We check recent GitHub activity via the GitHub server to correlate with deployments

- We consult our incident response runbook stored in Context7

- We use Sequential Thinking to generate a structured action plan

- We document the entire incident back in GitHub, all through the same secure gateway

Let me create a demo scenario with a problematic pod:

➜ ~ kubectl get pods -A

NAMESPACE NAME READY STATUS RESTARTS AGE

default test-container 0/1 CrashLoopBackOff 736 (3m18s ago) 105d

demo-app broken-pod 0/1 Completed 0 112m

Workflow in practice

Here’s how the flow looks with all the tools we have integrated:

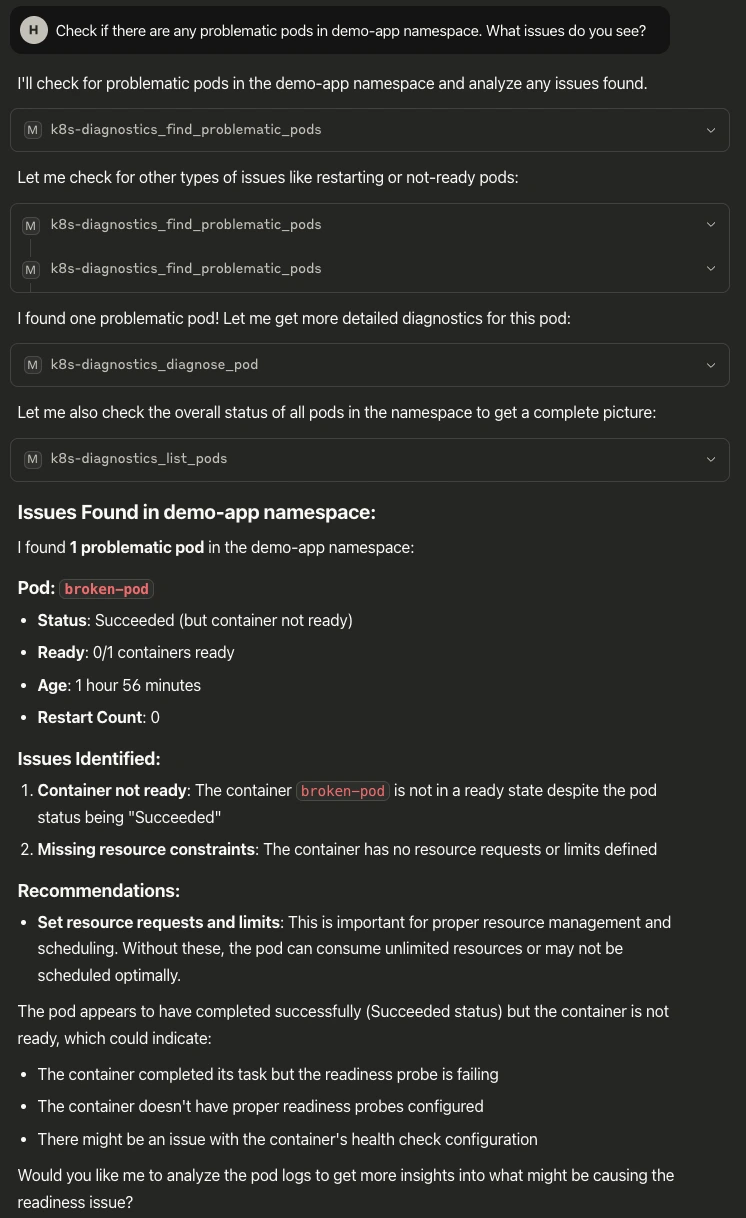

-

Discovery: k8s-diagnostics identifies the broken pod with an error.

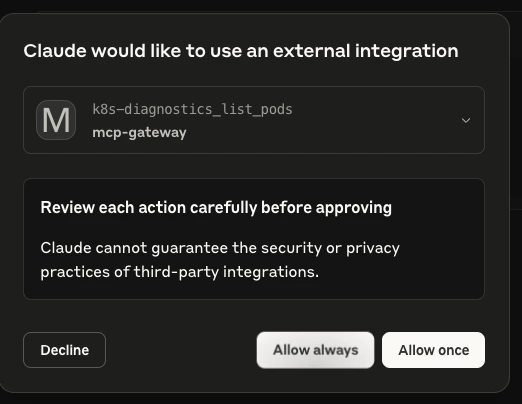

Prompt: “Check if there are any problematic pods in the <YOUR_NAMESPACE> namespace. What issues do you see?”

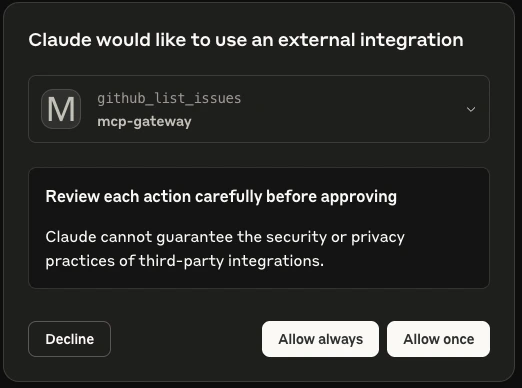

Note: Claude’s desktop will ask for access to perform all these tasks.

-

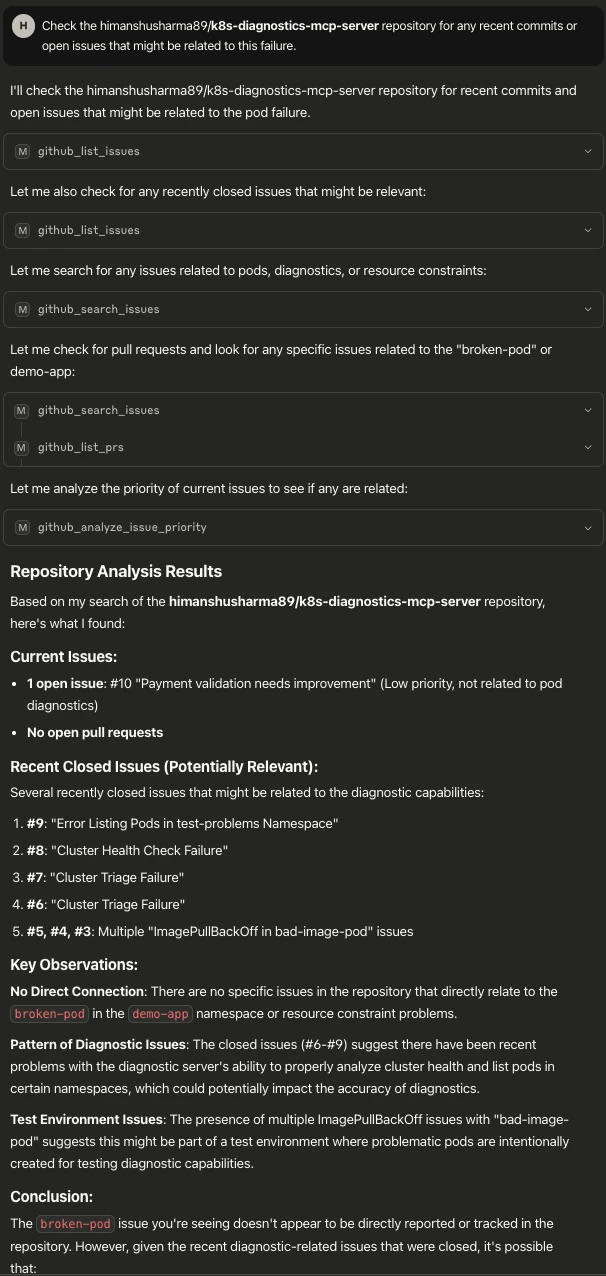

Context gathering: GitHub MCP shows recent commit and open issues Prompt: “Check the <YOUR_REPO> repository for any recent commits or open issues that might be related to this failure.”

-

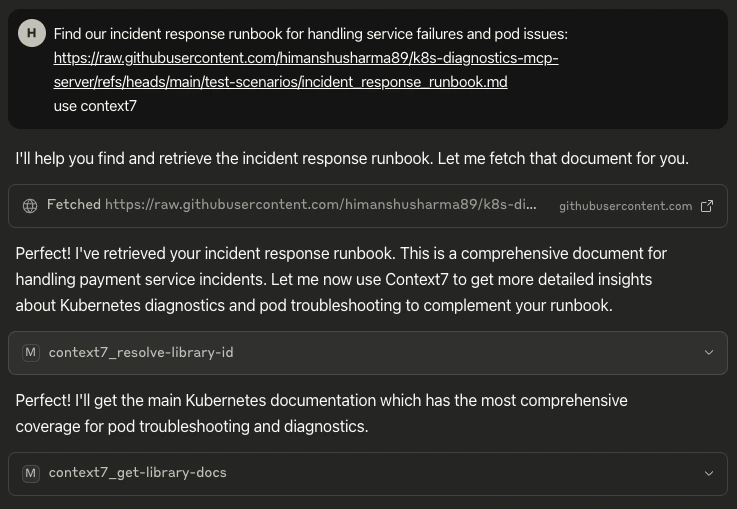

Documentation lookup: Context7 retrieves the uploaded runbook Prompt: “Find our incident response runbook for handling service failures and pod issues. Use context7”

-

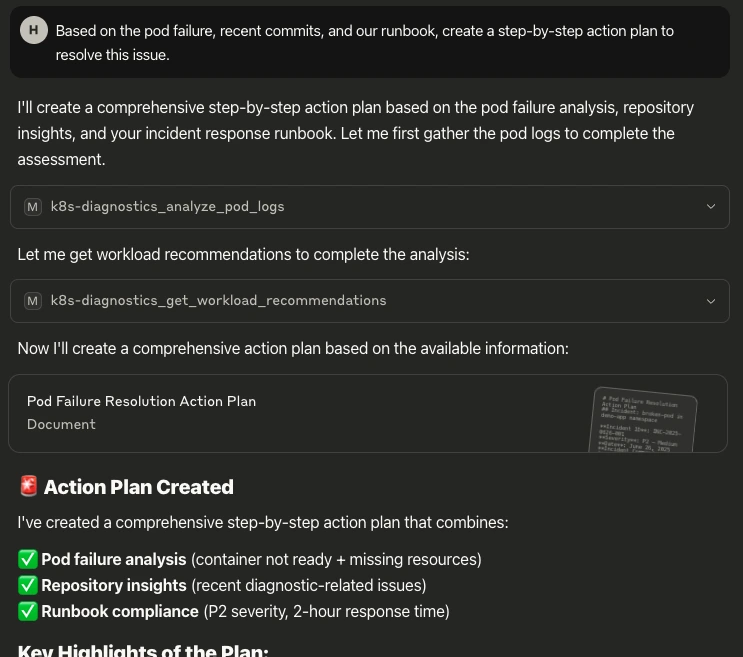

Structured problem solving: A structured plan action plan is built using k8s-diagnostics Prompt: “Based on the pod failure, recent commits, and our runbook, create a step-by-step action plan to resolve this issue.”

-

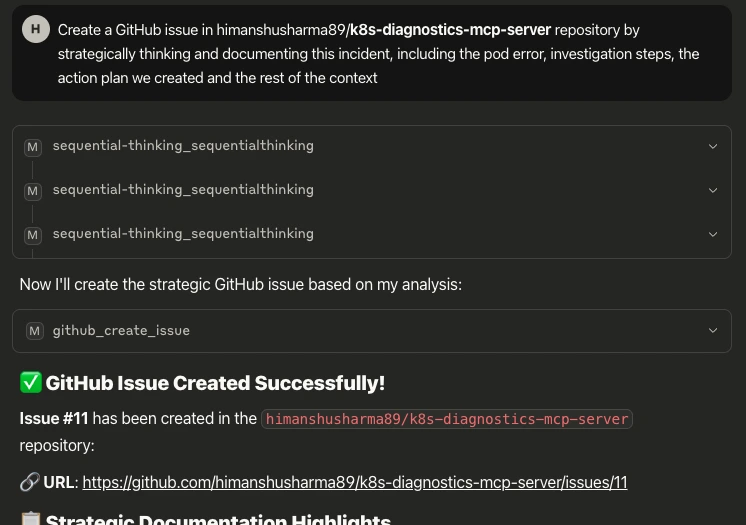

Action taking: Sequential Thinking and GitHub MCP creates a comprehensive incident issue Prompt: “Create a GitHub issue in <YOUR_REPO> repository documenting this incident, including the pod error, investigation steps, and the action plan we created.”

This incident response workflow showcases how the MCP Gateway acts as a unified control plane for real-world debugging, seamlessly integrating diagnostics, documentation, code activity, and structured reasoning into a single, conversational interface.

The future of MCP gateway integration

The MCP gateway ecosystem continues evolving rapidly, driven by both technological advances and growing enterprise adoption requirements.

Emerging patterns

Several significant trends are shaping the next generation of gateway capabilities.

- Specialized gateway plugins are emerging for domain-specific needs in industries like healthcare, finance, and legal, where compliance and security requirements demand tailored solutions.

- AI-powered routing decisions represent a fascinating development where machine learning algorithms optimize tool selection and routing based on historical performance and current context.

- Federated gateway architectures enable multiple gateways to work together across organizational boundaries, supporting complex enterprise scenarios.

- Complex tool orchestration capabilities are evolving to support automated workflows that span multiple tools and decision points without human intervention.

Community ecosystem

The community building around MCP gateways reflects the technology’s maturation and widespread adoption.

- A plugin marketplace is developing as a centralized repository for gateway plugins and extensions, making it easier for teams to find and share solutions.

- Configuration template sharing provides pre-built configurations for common use cases, accelerating implementation timelines.

- Best practice documentation grows through community-driven efforts, capturing lessons learned from real-world deployments.

- Monitoring dashboard templates offer reusable monitoring and alerting configurations that teams can adapt to their specific needs.

Final words: Your centralized AI productivity hub

The shift from managing standalone MCP servers to operating through a centralized gateway marks a deeper transformation in how teams enable AI-driven productivity. The MCP Gateway unifies integration, security, and observability into a single, manageable surface, reducing operational complexity and streamlining development workflows. Instead of juggling tool-specific configurations, teams can focus on building smarter automation and scalable systems with consistent policies and centralized oversight.

Start small, connect the tools you use most, observe their usage, and grow based on real demand. The open source implementation gives you everything needed to begin, with a growing community to learn from and contribute to.

The MCP Gateway isn’t just a technical improvement; it’s a path toward building resilient, secure, and intelligent AI infrastructure at scale. To learn more about the latest in AI, watch our AI-Xplore webinars. If you need help building an AI cloud, our AI experts can help you.

If you found this guide useful and want to discuss more about MCP and AI agents, feel free to connect with me on LinkedIn.

Stay updated with latest in AI and Cloud Native tech

We hate 😖 spam as much as you do! You're in a safe company.

Only delivering solid AI & cloud native content.